RCinFLA

Solar Wizard

- Joined

- Jun 21, 2020

- Messages

- 3,562

I understand the battery a bit better now. 'Tubular' refers to how the positive plate is constructed. Its primary benefit is reducing oxidation corrosion of the positive plate grid, which otherwise increases the battery's internal impedance. The other thing about these particular batteries is that they use a more dilute acid concentration for the electrolyte (28% versus the normal 33%), which is the primary way to increase the life of a lead-acid battery. It is not without downsides: about 20% less capacity for the size, higher cell impedance, and, the biggest issue, battery capacity drops significantly with any sulfation. Sulfation locks up some of the sulphur so it cannot be recharged back into sulphuric acid, which leaves a very low density of sulphuric acid in the electrolyte.

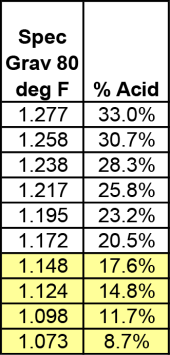

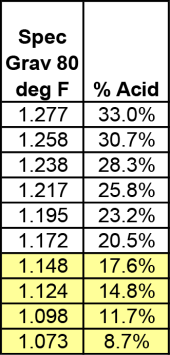

Assuming your measurement of about 30-35 A of battery current is accurate, getting only about 1.5 hours of discharge before the battery is depleted means it is yielding only about 50 Ah. My guess is that the specific gravity (SG) of the electrolyte has dropped to a very low level due to insufficient charging and the resulting sulfation of the negative plates. If you can, get a float-based hydrometer and measure it. Make sure the electrolyte level is above the top of the plates, at its recommended level. The SG spec for these batteries is 1.24 (28.5% acid concentration) when fully charged. This is lower than most flooded lead-acid batteries, which have an SG of about 1.28 (33% acid concentration).
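The capacity estimate is just current times run time. A quick sketch of that arithmetic, assuming the current stays roughly constant over the discharge (the function name is mine, purely for illustration):

```python
def delivered_capacity_ah(current_a: float, hours: float) -> float:
    """Amp-hours delivered at a roughly constant discharge current."""
    return current_a * hours


# Measured range from the thread: 30-35 A for about 1.5 hours.
low_ah = delivered_capacity_ah(30.0, 1.5)
high_ah = delivered_capacity_ah(35.0, 1.5)

print(f"Delivered capacity: {low_ah:.1f} to {high_ah:.1f} Ah")
```

That lands at roughly 45 to 52 Ah, which is where the "about 50 Ah" figure comes from.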

This is probably why the representative recommended equalization, in an attempt to reduce the sulfation. It will burn off a lot of electrolyte water, so make sure you check the electrolyte level and add distilled water as necessary.

I have doubts that the batteries can be recovered.

If equalization does not improve the battery, a last-ditch option would be to increase the electrolyte acid concentration by removing some of the existing electrolyte and replacing it with concentrated sulphuric acid to get the SG up to 1.24-1.27. This will give the battery a little 'adrenaline' shot.
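For a very rough idea of how much electrolyte would have to be swapped out, here is a sketch that treats SG as mixing linearly by volume. That is only a first approximation, since sulphuric acid and water do not mix with perfectly additive volumes, and the starting SG of 1.10 and the replacement acid SG of 1.84 below are assumed example values, not measurements from this battery. Go by the hydrometer reading after mixing, not by this number.

```python
def fraction_to_replace(sg_now: float, sg_target: float, sg_acid: float) -> float:
    """Volume fraction of electrolyte to swap for acid to reach sg_target.

    Assumes SG mixes linearly by volume (an approximation):
        sg_target = (1 - f) * sg_now + f * sg_acid
    """
    if not sg_now <= sg_target < sg_acid:
        raise ValueError("need sg_now <= sg_target < sg_acid")
    return (sg_target - sg_now) / (sg_acid - sg_now)


# Assumed example values: depleted cell at SG 1.10, replacement acid at SG 1.84.
f = fraction_to_replace(sg_now=1.10, sg_target=1.24, sg_acid=1.84)
print(f"Replace roughly {f:.0%} of the electrolyte volume")
```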