stienman (Mostly Harmless) · Joined: Jan 6, 2021 · Messages: 476

I've been trying to follow along, but I'm not certain why all the speed and accuracy are needed.

To determine internal resistance, you only need two data points, not 300+ samples per second.

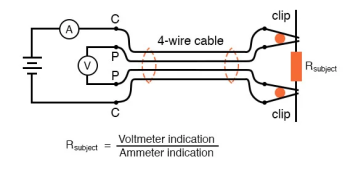

Measure the current through the entire pack (in a series string, the same current flows through every cell), and simultaneously measure the voltage across each cell. Do this twice, at two different currents, and each cell's resistance is the change in its voltage divided by the change in current: R = ΔV / ΔI.
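A minimal sketch of the two-point calculation. It assumes a series string (so one pack-current reading applies to every cell), treats discharge current as positive (so a cell's terminal voltage drops as current rises), and assumes the two snapshots are taken close enough together that the cells' open-circuit voltage hasn't shifted. The function name and the readings are hypothetical, not from any particular BMS.

```python
from typing import List


def two_point_dc_ir(
    pack_current_a: float,
    cell_voltages_a: List[float],
    pack_current_b: float,
    cell_voltages_b: List[float],
) -> List[float]:
    """Estimate each cell's DC internal resistance (ohms) from two snapshots.

    Each snapshot pairs one pack-current reading with simultaneous per-cell
    voltage readings. With discharge current positive, V = V_oc - I * R for
    each cell, so R = (V_a - V_b) / (I_b - I_a).
    """
    if len(cell_voltages_a) != len(cell_voltages_b):
        raise ValueError("both snapshots must cover the same cells")
    delta_i = pack_current_b - pack_current_a
    if delta_i == 0:
        # Two equal currents say nothing about resistance.
        raise ValueError("the two measurements must be at different currents")
    return [(va - vb) / delta_i for va, vb in zip(cell_voltages_a, cell_voltages_b)]


# Hypothetical readings: a 2 A light load, then a 20 A heavier load.
light = [3.300, 3.298, 3.301]
heavy = [3.282, 3.262, 3.283]
print(two_point_dc_ir(2.0, light, 20.0, heavy))
```

Note that the result includes whatever sits between the voltage sense points, so a bad busbar or lug shows up as a higher "cell" resistance, which is exactly what you'd want to be warned about.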

Further, internal resistance (IR) doesn't change quickly. One measurement per day gives plenty of advance warning that a cell, or its connections, needs a closer look.
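Because IR drifts slowly, a once-a-day reading can simply be compared against a per-cell baseline. A minimal sketch, assuming a hypothetical 25% rise over baseline as the "go check this cell" threshold; the right threshold depends on the cells and isn't something specified here.

```python
from typing import Dict, List


def cells_to_check(
    baseline_ohms: Dict[str, float],
    today_ohms: Dict[str, float],
    max_rise: float = 0.25,  # hypothetical: flag a rise of more than 25% over baseline
) -> List[str]:
    """Return IDs of cells whose daily DC IR reading has risen past the threshold."""
    flagged = []
    for cell_id, base in baseline_ohms.items():
        current = today_ohms.get(cell_id)
        if current is None:
            continue  # no reading today for this cell; nothing to compare
        if current > base * (1.0 + max_rise):
            flagged.append(cell_id)
    return flagged


baseline = {"cell1": 0.0010, "cell2": 0.0010, "cell3": 0.0012}
today = {"cell1": 0.0011, "cell2": 0.0016, "cell3": 0.0012}
print(cells_to_check(baseline, today))  # → ['cell2']
```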

Yes, it's not the "ideal" 1 kHz AC internal resistance check, but it's a much more realistic indicator of how much energy a cell loses as heat versus stores or delivers. Frankly, the 1 kHz IR figure doesn't have a lot of value; it's only used because it produces a misleadingly low number that doesn't represent energy loss within the cell under real-world conditions.
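The energy-loss point can be made concrete with Joule heating, P = I²R: at a given load current, the heat predicted inside the cell scales directly with whichever resistance figure you plug in. A sketch with hypothetical resistance values, for illustration only (not measurements of any real cell):

```python
def joule_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Heat dissipated in a resistance at a steady current: P = I^2 * R (watts)."""
    return current_a ** 2 * resistance_ohm


# Hypothetical values for one cell at a 50 A load, for illustration only.
load_a = 50.0
for label, r in (("1 kHz AC IR", 0.0005), ("DC IR", 0.0010)):
    print(f"{label}: {joule_loss_w(load_a, r):.2f} W")
```

If the lower AC figure were used to size cooling or estimate efficiency, the predicted heat would be proportionally lower than what the DC figure implies.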

I could be way off base here. Perhaps someone can help me understand the difference between a DC IR measurement and the AC measurement everyone seems to treat as the gold standard?
