I'm designing a solar system for a van. It will include LiFePO4 batteries and three charge sources (solar, alternator, and inverter/charger).

I'm going with Victron equipment and trying to educate myself on how to configure the various charging sources to charge the batteries in a way that maximizes service life. They all seem to use the "traditional" phases of bulk, absorption, and float, based on voltage and time.

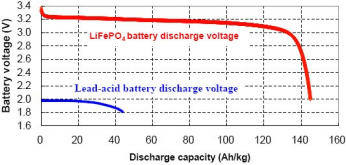

With LiFePO4 voltage being so flat across the bulk of its SOC range, I don't understand how these charging sources can optimize the charging cycle when voltage isn't a great indicator of SOC. For example, how does the inverter know when the solar is also making a big contribution to charging, so that it can reduce the duration of bulk or absorption? Shouldn't those stages be shorter if more amps are being pushed from multiple sources? I assume battery voltage will drive adjustment at the extremes, but that doesn't seem great with the flat voltage curve of LiFePO4. If the charger isn't constantly looking at the net charge going into the battery across all charge sources (and accounting for loads as well), I don't see how it could know how long to apply charge at a given voltage. As an example, I might have the inverter/charger configured for 1 hour of absorption time, pushing 50 amps to the batteries. Does that 1 hour get adjusted down when solar is pushing another 50 amps at the same time? Does it get extended when there are 95 amps of load offsetting most of the charging (so really only 5 amps "net" going into the battery)?
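To put numbers on that example, here is a rough sketch of the arithmetic I have in mind. The function names and the 20 Ah "still needed" figure are made up for illustration, and it assumes constant current, which ignores the way current tapers during absorption. It is only meant to show why a fixed 1-hour timer looks odd to me, not how any real charger behaves.

```python
def net_battery_current(charge_sources_a, loads_a):
    """Net current into the battery in amps: all charger outputs minus loads."""
    return sum(charge_sources_a) - loads_a


def hours_to_deliver(amp_hours, net_current_a):
    """Hours needed to put amp_hours into the battery at a constant net current.

    Assumption: constant current, no taper. Returns infinity if the
    battery is not actually being charged (net current <= 0).
    """
    if net_current_a <= 0:
        return float("inf")
    return amp_hours / net_current_a


# Hypothetical: 20 Ah still needed to finish the charge.
needed_ah = 20

inverter_only = net_battery_current([50], loads_a=0)          # 50 A
inverter_and_solar = net_battery_current([50, 50], loads_a=0)  # 100 A
both_with_load = net_battery_current([50, 50], loads_a=95)     # 5 A

for label, amps in [
    ("inverter only", inverter_only),
    ("inverter + solar", inverter_and_solar),
    ("inverter + solar, 95 A load", both_with_load),
]:
    print(f"{label}: {amps} A net, {hours_to_deliver(needed_ah, amps):.1f} h")
```

Under those assumptions the same 20 Ah takes 0.4 hours, 0.2 hours, or 4 hours depending on what else is happening on the bus, which is why a fixed time setting confuses me.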

Assuming you have an accurate shunt on the system, wouldn't the battery's % state of charge be a key input to how charging volts and amps should be optimized? I'm not saying the system should ignore voltage completely, but it seems like SOC would be a better "primary" driver for optimizing the chargers over the bulk of the charge cycle. It seems like it would be pretty simple to let the shunt determine state of charge and have all the charging sources adjust voltage/current accordingly, with some sanity checks on battery voltage to override when necessary.
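Here is a minimal sketch of the kind of logic I'm imagining, just to make the idea concrete. Everything in it is hypothetical: the function name, the SOC breakpoints, and the voltage and current numbers are placeholders I picked for a 12 V pack, not values from Victron or any battery manufacturer, and I'm not claiming any real charger works this way.

```python
from dataclasses import dataclass


@dataclass
class Setpoint:
    voltage_v: float        # target charge voltage sent to every charger
    current_limit_a: float  # total charge current allowed into the battery


# Illustrative placeholder values only (hypothetical, not manufacturer specs).
ABSORB_V = 14.2
FLOAT_V = 13.5
MAX_SAFE_V = 14.4
BULK_A = 100.0
TAPER_A = 20.0
FLOAT_A = 5.0


def soc_driven_setpoint(soc_pct, battery_v):
    """Pick a shared setpoint from shunt SOC, with a voltage sanity override."""
    # Sanity check first: measured voltage wins over whatever the shunt reports.
    if battery_v >= MAX_SAFE_V:
        return Setpoint(FLOAT_V, 0.0)
    if soc_pct < 90:
        return Setpoint(ABSORB_V, BULK_A)   # far from full: charge hard
    if soc_pct < 100:
        return Setpoint(ABSORB_V, TAPER_A)  # nearly full: back off the current
    return Setpoint(FLOAT_V, FLOAT_A)       # full: just hold


print(soc_driven_setpoint(50, 13.3))
print(soc_driven_setpoint(95, 13.4))
print(soc_driven_setpoint(60, 14.5))  # shunt says 60% but voltage says stop
```

The last call is the override case I mean: if the shunt has drifted and reports 60% while the pack voltage is already high, the voltage check stops charging regardless of the SOC reading.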

All that said, I'm pretty new to this stuff and just designing my first system now. I'm probably overthinking this, but everything I read about LiFePO4 charging leaves me confused, especially when I see fixed timeframes defined for the various charge phases regardless of the number of sources. Is my line of thinking flawed? Is there something happening in these systems that I'm not considering? I'm just trying to get educated on this stuff as best I can at this point.
