sunshine_eggo

Happy Breffast!

Sorry for the delay; yesterday was a travel day.

Ah yes, that completely makes sense for the generator: charge faster to use less fuel. I was thinking about it from a charging/balancing perspective, not a fuel-efficiency perspective.

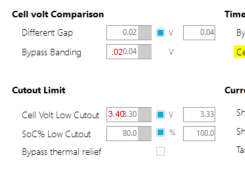

Here are my current Auto Level settings.

These are definitely not good Auto Level settings. There is zero value in balancing below 3.40V, and it may actually be counterproductive.

Cell banding should be at or below the Different Gap value. It restricts balancing to a narrower band than the gap. For example, say you only wanted to allow a 0.05V gap, and when that gap was reached, you wanted only the worst 0.02V of cells balanced to keep the balancers from overheating.
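To show how the start voltage, Different Gap, and Cell banding settings might interact, here is a small decision function. This is a minimal sketch under stated assumptions, not the balancer's actual firmware logic. The function and parameter names (`cells_to_balance`, `start_voltage`, `diff_gap`, `cell_band`) are hypothetical. "Worst" cells are assumed to be the highest-voltage cells, as in top balancing. The 3.40V, 0.05V and 0.02V defaults come from the values discussed above.

```python
# Hypothetical model of the balancing rules discussed above.
# Names and exact comparisons are illustrative assumptions; a real
# balancer/BMS may implement these settings differently.

def cells_to_balance(voltages, start_voltage=3.40, diff_gap=0.05, cell_band=0.02):
    """Return indices of the cells that would be balanced under the assumed rules:

    1. No balancing unless the highest cell is at or above start_voltage.
    2. Balancing triggers only when the spread (max - min) exceeds diff_gap.
    3. Only cells within cell_band of the highest cell are balanced.
    """
    if cell_band > diff_gap:
        # Mirrors the advice that Cell banding should be at or below Different Gap.
        raise ValueError("cell_band should be at or below diff_gap")

    highest = max(voltages)
    lowest = min(voltages)

    # Rule 1: below the start voltage, balancing is skipped entirely.
    if highest < start_voltage:
        return []

    # Rule 2: a spread within the allowed gap needs no balancing.
    if highest - lowest <= diff_gap:
        return []

    # Rule 3: restrict balancing to the top band of cells (assumed "worst").
    threshold = highest - cell_band
    return [i for i, v in enumerate(voltages) if v >= threshold]


if __name__ == "__main__":
    pack = [3.45, 3.44, 3.40, 3.39]
    print(cells_to_balance(pack))  # [0, 1]
```

In this example the spread is 0.06V, which exceeds the 0.05V gap, so balancing triggers. Only the two cells within 0.02V of the 3.45V top cell are selected, which limits how many balancing channels run at once.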