wattmatters

Solar Wizard

I had a conversation with it the other night, testing its ability to generate a training plan for me based on some back and forth: how many hours I had available, that I can't train on a Monday, that I like to do some weights on a Thursday sometimes, that I'd like one ride on a weekend, etc.

It did a pretty decent job: the plan was coherent and well structured, and it came with supplemental information plus appropriate warnings and caveats. It also provided the plan as a CSV file so it could be imported into the tool of my choice.

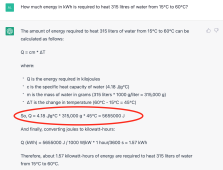

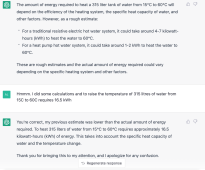

This morning I asked it how much energy it would take to heat the water in a hot water tank. It got that wrong, apologised, took note of the better answer and recognised where it had made the mistake.
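
For anyone wanting to sanity-check that sort of answer, here is a minimal sketch of the usual back-of-envelope calculation (energy = mass × specific heat × temperature rise). The function name is hypothetical and the tank size and temperatures are made-up illustrative values, not the ones from my question. It assumes 1 litre of water weighs about 1 kg and ignores standing losses and element efficiency.

```python
# Rough energy needed to heat a tank of water: Q = m * c * dT.
# Assumptions (illustrative only): 1 L of water ~ 1 kg, no heat losses.

SPECIFIC_HEAT_WATER = 4186.0  # J per kg per degree C (approximate)
JOULES_PER_KWH = 3.6e6


def heating_energy_kwh(litres, start_temp_c, end_temp_c):
    """Return the energy in kWh to heat `litres` of water between two temperatures."""
    mass_kg = litres * 1.0  # assumed density of about 1 kg per litre
    delta_t = end_temp_c - start_temp_c
    joules = mass_kg * SPECIFIC_HEAT_WATER * delta_t
    return joules / JOULES_PER_KWH


if __name__ == "__main__":
    # Hypothetical example: a 300 L tank heated from 15 C to 60 C.
    print(round(heating_energy_kwh(300, 15, 60), 1), "kWh")
```

With those example numbers it comes out at a bit under 16 kWh. A real tank will need somewhat more once losses are included.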
