After reading and searching both here and on the Victron Community forums for an answer, I am still clueless.

I have noticed many users reporting that the voltage shown in the app deviates from the actual readings at the battery and SCC terminals. The deviation seems to be 0.1V to 0.3V, which can be significant with lithium batteries.

These reports go back as far as 2017, and some are still current today, so I believe the firmware version is (almost) irrelevant.

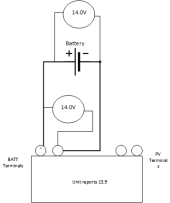

My setup is dead simple: an Amperetime 12V 50Ah battery + a Victron SmartSolar 100/20.

Nothing else is connected to this system so far. The cable between the battery and the SCC is only 20 cm long and 6 mm².

Three different voltmeters show 13.36V at the battery terminals, and the same value at the SCC battery terminals.

But the app shows 13.29V.

Then I connect a 100W solar panel to the SCC and it starts charging, with a custom bulk/termination voltage of 13.8V.

When it reaches the set point, the battery and SCC terminals show 14.1-14.2V while the app shows 13.8V.
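For what it's worth, I did a rough back-of-the-envelope check to rule out the cable. This is only a sketch under my own assumptions (not Victron figures): copper resistivity of about 1.68e-8 Ω·m, both the positive and negative conductors 20 cm long, and a 100W panel delivering at most roughly 100W / 13.8V ≈ 7.2A.

```python
# Rough sanity check: can the 20 cm, 6 mm² cable explain the voltage gap?
# Assumptions (mine, not from Victron): copper resistivity ~1.68e-8 ohm·m,
# both conductors are 20 cm long, and the 100 W panel gives at most ~100 W / 13.8 V.

RHO_COPPER = 1.68e-8   # ohm·m, approximate value for copper at 20 °C
LENGTH_M = 0.20        # length of one conductor
AREA_M2 = 6e-6         # 6 mm² cross-section


def cable_drop(current_a: float) -> float:
    """Voltage drop (V) across the round trip: positive plus negative conductor."""
    resistance = RHO_COPPER * (2 * LENGTH_M) / AREA_M2
    return current_a * resistance


resting_gap = 13.36 - 13.29                      # no solar, no load: ~0 A flowing
charging_gap = (14.1 - 13.8, 14.2 - 13.8)        # terminals vs. app at set point
max_current = 100 / 13.8                         # rough upper bound on charge current

print(f"cable resistance: {cable_drop(1.0) * 1000:.2f} mOhm")
print(f"drop at {max_current:.1f} A: {cable_drop(max_current) * 1000:.1f} mV")
print(f"observed gap at rest: {resting_gap * 1000:.0f} mV")
print(f"observed gap while charging: {charging_gap[0]:.1f}-{charging_gap[1]:.1f} V")
```

If those assumptions hold, the cable drops only around 8 mV even at full panel current, and at rest there is no current at all, so it can't account for a 70 mV gap at rest or a 0.3-0.4V gap while charging.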

Is this behavior normal for Victron controllers? Does anyone have a suggestion/solution?