Yes, an MPPT is more efficient, and you get more out of the panels than with a simple relay, if that is what you are asking. Also, two panels in series with an MPPT give you a slightly longer day, as the controller will "turn on" earlier in the day and "off" later in the evening.

An MPPT is not always more efficient. You'll notice they generally have heat sinks, which means they throw away energy as heat. The SSRs I have produce almost no heat. One needs to prove that an MPPT will be worth the money, and that depends on the use case, the batteries, the temperature, and the panels.
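A rough sketch of that trade-off, assuming a hypothetical 12 V-class panel: with a relay or SSR the panel is pulled down to battery voltage and delivers roughly its short-circuit current there, while an MPPT runs the panel at its maximum power point but loses a fraction of that as heat. The function names, the panel figures (Vmp 18 V, Imp 5.5 A, 5.8 A at battery voltage) and the 95% converter efficiency are illustrative assumptions, not measurements.

```python
def direct_harvest_w(battery_v: float, panel_a_at_battery_v: float) -> float:
    """Relay/SSR: the panel is forced to battery voltage, so the power is
    battery voltage times whatever current the panel makes at that voltage."""
    return battery_v * panel_a_at_battery_v


def mppt_harvest_w(vmp: float, imp: float, efficiency: float) -> float:
    """MPPT: the panel runs at its maximum power point (Vmp, Imp), but the
    converter loses (1 - efficiency) of that power as heat."""
    return vmp * imp * efficiency


if __name__ == "__main__":
    # Hypothetical panel and battery numbers, for illustration only.
    direct = direct_harvest_w(battery_v=13.3, panel_a_at_battery_v=5.8)
    mppt = mppt_harvest_w(vmp=18.0, imp=5.5, efficiency=0.95)
    print(f"direct: {direct:.1f} W, MPPT: {mppt:.1f} W")
```

With these made-up numbers the MPPT wins by about 17 W; if Vmp sits closer to battery voltage, or the converter is less efficient, the gap narrows or disappears.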

If you are not grid-tied, then you probably have excess power most of the time, so what is the point of blowing money to squeeze out a bit more occasionally? For example, this past week at Steamboat Rock there was no way I could use all the power, so an MPPT would have been a total waste of money. If it had been a heat wave, my cells couldn't have kept up with the A/C anyway, and I would have found an outlet. There is a huge range of temperatures and weather where I have no need for all the power, and only a very thin range of weather where an MPPT will make a difference.
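The excess-power argument can be put as simple arithmetic: any harvest beyond what the loads and battery can absorb is wasted with either setup, so the useful gain from an MPPT is capped by demand. A minimal sketch; `usable_mppt_gain_w` and the wattages are hypothetical.

```python
def usable_mppt_gain_w(direct_w: float, mppt_w: float, demand_w: float) -> float:
    """Watts an MPPT adds that actually get used.

    Harvest above demand_w (loads plus whatever the battery will accept) is
    thrown away with either setup, so both harvests are capped at demand_w.
    The result is negative if the MPPT harvests less than the direct link.
    """
    return min(mppt_w, demand_w) - min(direct_w, demand_w)


if __name__ == "__main__":
    # Hypothetical harvests: 77 W direct vs. 94 W through an MPPT.
    for demand in (60.0, 85.0, 200.0):
        print(demand, usable_mppt_gain_w(direct_w=77.0, mppt_w=94.0, demand_w=demand))
```

With demand at 60 W the MPPT adds nothing usable; only at 85 W and 200 W does it contribute 8 W and 17 W.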

It is much easier to justify the expense and complexity of an MPPT if you are grid-connected, and thus never wasting sunlight, and you gain something by not having to match the cells to the battery. I am not grid-connected. I am throwing away power at a furious rate, so I don't need to throw away money on an MPPT.

A spinning Earth will not provide a CV absorption charge. The only way to do that is with a controller that supports an absorption phase.

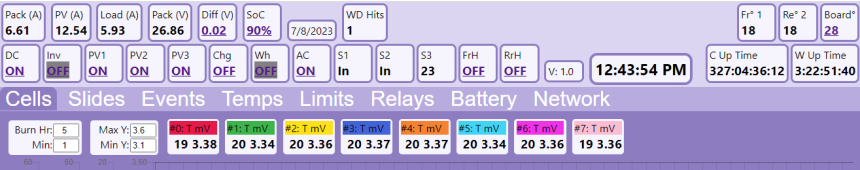

The Sun's power tapers off nicely every day. Whenever my BMS is triggered to bring the pack to 100%, it monitors every cell's voltage and the pack current, ensures it does not overcharge any cell, and declares the pack 100% full when one cell reaches 3.5 V and the current is below X amps.
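The full-charge rule described above could look like this. It is a minimal sketch: `pack_is_full` is a hypothetical name, not this BMS's firmware, and because the post does not give the current threshold X, it is left as a caller-supplied parameter.

```python
from typing import Sequence

FULL_CELL_V = 3.5  # per-cell "full" voltage used in the post


def pack_is_full(cell_voltages: Sequence[float], pack_current_a: float,
                 tail_current_a: float, full_cell_v: float = FULL_CELL_V) -> bool:
    """Declare the pack 100% full when the highest cell has reached
    full_cell_v AND the charge current has fallen below tail_current_a
    (the post's unspecified "X" amps)."""
    return max(cell_voltages) >= full_cell_v and pack_current_a < tail_current_a
```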

It makes no sense that the free tapering the Sun does every day is somehow bad, yet the same Sun supplies the power that lets the charge controller do its pristine tapering. If you have enough excess power to taper flawlessly, then you don't need an MPPT; you are already throwing away sunshine.

Most BMSs have no way other than SOC/coulomb counting to know whether a battery is fully charged. Voltage alone won't tell you that. You can overcharge an LFP battery below 3.65 V per cell.

I agree you can overcharge one or more cells by charging a pack to 3.65 × N volts (where N is the number of cells in series), but I don't agree that you can overcharge a cell while ensuring it stays below 3.65 V. Additionally, I don't see the point of ever going to 3.65 V. The voltage rises steeply above about 3.4 V, so I set mine to stop at 3.5 V.
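The difference between a pack-level setpoint and a per-cell limit is easy to show with numbers. A minimal sketch, assuming a hypothetical, badly unbalanced 4S pack; the helper names are illustrative only.

```python
from typing import List, Sequence

CELL_MAX_V = 3.65  # common LFP per-cell maximum


def pack_setpoint_v(n_series: int, per_cell_v: float = CELL_MAX_V) -> float:
    """Pack-level charge voltage: N series cells times the per-cell limit."""
    return n_series * per_cell_v


def cells_over(cell_voltages: Sequence[float], limit_v: float = CELL_MAX_V) -> List[int]:
    """Indices of cells that are above the per-cell limit."""
    return [i for i, v in enumerate(cell_voltages) if v > limit_v]


if __name__ == "__main__":
    cells = [3.55, 3.55, 3.55, 3.90]  # hypothetical unbalanced 4S pack
    print(f"pack {sum(cells):.2f} V vs setpoint {pack_setpoint_v(4):.2f} V")
    print("cells over limit:", cells_over(cells))
```

The pack total stays under the 14.6 V setpoint while one cell sits at 3.9 V, which is why a per-cell limit catches what a pack-voltage limit misses.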

Yes, I totally agree that most BMSs and charge controllers suck because they cannot monitor SOC, and so they relentlessly bring the battery to 100% every day. My BMS does monitor SOC, stops at n%, and thus rarely brings any cell past 3.4 V.
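A minimal sketch of stopping at a target SOC, assuming simple coulomb counting (current integrated over time, positive while charging). `CoulombCounter`, `charge_allowed` and the 100 Ah capacity are hypothetical; a real BMS also has to correct the drift that pure counting accumulates, typically by resyncing when the pack is known to be full.

```python
from dataclasses import dataclass


@dataclass
class CoulombCounter:
    capacity_ah: float
    soc: float  # state of charge, 0.0 to 1.0

    def update(self, current_a: float, dt_s: float) -> None:
        """Integrate current (positive = charging) over dt_s seconds."""
        self.soc += current_a * dt_s / 3600.0 / self.capacity_ah
        self.soc = min(1.0, max(0.0, self.soc))  # keep SOC within 0..100%


def charge_allowed(counter: CoulombCounter, stop_soc: float) -> bool:
    """Allow charging only while the counted SOC is below the n% target."""
    return counter.soc < stop_soc


if __name__ == "__main__":
    pack = CoulombCounter(capacity_ah=100.0, soc=0.5)
    pack.update(current_a=10.0, dt_s=3600.0)  # one hour at 10 A adds 10 Ah
    print(f"SOC {pack.soc:.0%}, keep charging: {charge_allowed(pack, stop_soc=0.8)}")
```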

A charge controller that monitors tail current will do a better job at stopping charge at the correct time.

What is the "correct time"? What is a "better job"? Again, my BMS always prevents any cell from exceeding x V. It is correct to stop charging if any one cell hits x (I set my x to 3.5 V). Are you arguing that it's better to deliver steadily decreasing amps rather than on/off pulses with decreasing amps? OK, then what are you doing to ensure the Sun keeps shining to maintain that? And since that BMS/charger doesn't monitor SOC and so can't let you stop at x%, this happens every day. And again, if the controller can do this, it must have excess power, and thus the MPPT functionality is rather pointless.
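The on/off approach can be sketched as a relay or SSR with hysteresis: open as soon as any cell reaches the stop voltage (3.5 V, as in the post) and close again only after the highest cell has relaxed below a resume voltage. The 3.35 V resume value and the function name are hypothetical.

```python
def relay_closed(closed: bool, max_cell_v: float,
                 stop_v: float = 3.5, resume_v: float = 3.35) -> bool:
    """Return whether the charge relay/SSR should be closed next.

    Opens as soon as the highest cell reaches stop_v, then stays open
    until that cell has relaxed below resume_v, so it does not chatter.
    """
    if closed and max_cell_v >= stop_v:
        return False
    if not closed and max_cell_v < resume_v:
        return True
    return closed
```

Each time the relay closes, the current is whatever the panels supply at that moment, so the pulses shrink on their own as the day tapers off.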

Setting the absorption voltage lower with a longer absorption time gives the BMS more time to balance the cells before the LFP is fully charged.

My BMS can turn on a cell's balance resistor, which drains that cell, at any time, not just while charging near the top. The BMS notes which cell reached the maximum and gives that cell a haircut so that a different cell reaches the peak next time.
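A sketch of that "haircut" balancing: when a cell is the first to reach the top, schedule a fixed amount of charge to bleed from it through its balance resistor, and let the resistor run at any time until that amount is gone. `Balancer`, the haircut size and the resistor current are illustrative assumptions, not this BMS's actual algorithm.

```python
from typing import List, Optional, Sequence


class Balancer:
    """Give the cell that tops out first a 'haircut' via its balance resistor."""

    def __init__(self, n_cells: int, haircut_ah: float) -> None:
        self.haircut_ah = haircut_ah        # charge to bleed per top-out event
        self.to_drain_ah = [0.0] * n_cells  # outstanding bleed per cell

    def record_top(self, cell_voltages: Sequence[float], top_v: float) -> Optional[int]:
        """If the highest cell has reached top_v, schedule a haircut for it
        and return its index; otherwise return None."""
        idx = max(range(len(cell_voltages)), key=lambda i: cell_voltages[i])
        if cell_voltages[idx] < top_v:
            return None
        self.to_drain_ah[idx] += self.haircut_ah
        return idx

    def resistors_on(self) -> List[int]:
        """Cells whose balance resistor should be on now, whether or not
        the pack is charging."""
        return [i for i, ah in enumerate(self.to_drain_ah) if ah > 0.0]

    def drain(self, resistor_a: float, dt_s: float) -> None:
        """Account for dt_s seconds of bleeding at resistor_a amps."""
        step_ah = resistor_a * dt_s / 3600.0
        self.to_drain_ah = [max(0.0, ah - step_ah) for ah in self.to_drain_ah]
```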

Do you really know how balanced the cells are? Do you know whether or not one cell is always getting to the top before the others, and thus that cell is taking abuse?

I assume these plug-and-play Li batteries, like Battle Born, stop the charge when one cell reaches max voltage but still allow the loads to run. If that is true, do you know how often this is happening? You'd have to monitor the charge controller to see that it is providing amps that all go to the loads while no amps flow into the battery.
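Checking that from logged currents could look like the heuristic below, assuming you can read the controller output, the load current and the battery current (for example from a shunt). The function names and the 0.5 A tolerance are hypothetical.

```python
from typing import Iterable, Tuple


def charge_blocked(controller_a: float, load_a: float, battery_a: float,
                   tolerance_a: float = 0.5) -> bool:
    """Heuristic for 'BMS has stopped charging but loads still run':
    the controller is producing current, essentially all of it goes to
    the loads, and almost nothing flows into the battery."""
    return (controller_a > tolerance_a
            and abs(controller_a - load_a) <= tolerance_a
            and abs(battery_a) <= tolerance_a)


def count_blocked(samples: Iterable[Tuple[float, float, float]],
                  tolerance_a: float = 0.5) -> int:
    """Count logged (controller, load, battery) samples that look blocked."""
    return sum(1 for ctrl, load, batt in samples
               if charge_blocked(ctrl, load, batt, tolerance_a))
```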

It also (according to many) extends the life of the battery by fully charging it without reaching 3.6 V. That is why the settings in an MPPT are adjustable: so users can tweak how that happens.

It extends the life of a lead-acid battery to keep it at 100%, but I am not aware that doing so extends LiFePO4 battery life. My understanding is that the more often a cell is above, say, 3.4 V (or below some low threshold), the more stress you are putting on it.
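If the concern is how often a cell sits above about 3.4 V, that can be measured from a BMS log rather than guessed. A minimal sketch, assuming a hypothetical log format of one list of per-cell voltages per fixed-interval sample.

```python
from typing import Sequence


def hours_above(cell_log: Sequence[Sequence[float]], threshold_v: float,
                sample_period_s: float) -> float:
    """Hours during which any cell was above threshold_v, from a log of
    per-cell voltages taken every sample_period_s seconds."""
    hits = sum(1 for cells in cell_log if max(cells) > threshold_v)
    return hits * sample_period_s / 3600.0


if __name__ == "__main__":
    # Hypothetical 2-cell log, one sample every 30 minutes.
    log = [[3.30, 3.32], [3.41, 3.38], [3.45, 3.43], [3.35, 3.36]]
    print(hours_above(log, threshold_v=3.4, sample_period_s=1800.0))
```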

If the MPPT just charged as hard as it could until the BMS stopped it, you would be charging in bulk mode until 3.65 V was reached, with no absorption. That is hard on an LFP battery. It's much better to hold an absorption voltage of about 3.5 V until the LFP is fully charged, without ever getting to 3.65 V.

Same as above.
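For reference, the bulk-then-absorption profile with tail-current termination that the quoted posts describe can be sketched as a small state machine. This shows the general CC/CV technique, not any particular controller's firmware; the `Stage` names, the 14.0 V (3.5 V × 4) absorption setpoint and the 2 A tail current are assumptions.

```python
from enum import Enum


class Stage(Enum):
    BULK = "bulk"              # constant current: take whatever the source gives
    ABSORPTION = "absorption"  # constant voltage: current tapers by itself
    DONE = "done"              # tail current reached, charging stopped


def next_stage(stage: Stage, pack_v: float, current_a: float,
               absorption_v: float, tail_current_a: float) -> Stage:
    """Advance a simple CC/CV charge profile by one measurement."""
    if stage is Stage.BULK and pack_v >= absorption_v:
        return Stage.ABSORPTION
    if stage is Stage.ABSORPTION and current_a < tail_current_a:
        return Stage.DONE
    return stage


if __name__ == "__main__":
    # Hypothetical 4S readings: (pack volts, charge amps)
    readings = [(13.4, 20.0), (13.8, 20.0), (14.0, 12.0), (14.0, 5.0), (14.0, 1.5)]
    stage = Stage.BULK
    for volts, amps in readings:
        stage = next_stage(stage, volts, amps, absorption_v=14.0, tail_current_a=2.0)
        print(volts, amps, stage.value)
```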

MPPT controllers are cheap. PWM even cheaper. I'm not sure what you would be gaining by making a cheaper one. Less reliability?

An SSR is even cheaper and even more reliable. An MPPT with a simple SSR-type circuit that follows the BMS's on/off command is more reliable than one with a microprocessor that can only measure pack voltage.

If I want to try out an MPPT, then I have to find one that does not rely on a microprocessor and communications to shut off, because I do not have an expensive (and thus unreliable) BMS that runs all the amps through it.

We do not gain reliability with more complex circuits and big mosfets.