Important: the discharge or charge current should be relatively steady. Use a clamp meter (keep in mind its ±2% accuracy) to check the current and voltage. Then compare the values with those from the PZEM and the BMS. Also check the current before and after the BMS, and before and after the PZEM, to see if there is anything unusual.

@Vi s hi, I started the process today. After charging my battery to the max and letting it rest overnight, I discharged it by 20% (40 Ah). I used my PZEM and the BMS to get the Ah value, but the problem is that they don't agree: when the PZEM reached 40 Ah, the BMS showed only 34 Ah. I don't know which one to trust; I chose the PZEM and stopped the experiment there for this first step.

It's strange that they don't give the same value. Also, while discharging the battery with a hair dryer, the instantaneous current shown by the BMS was 42 A, versus 48 A on the PZEM. That's the same difference of 6 as I had with the Ah.
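For reference, here is a quick sketch of both gaps in relative terms, using only the numbers above. Taking the PZEM as the reference is an arbitrary assumption, since it isn't known yet which meter is right.

```python
# Readings taken from the post above.
pzem_ah, bms_ah = 40.0, 34.0   # discharged capacity, Ah
pzem_a, bms_a = 48.0, 42.0     # instantaneous current, A


def relative_gap(reference, other):
    """Return how far `other` falls below `reference`, as a fraction of `reference`."""
    return (reference - other) / reference


# Assumption: the PZEM is used as the reference purely for comparison.
ah_gap = relative_gap(pzem_ah, bms_ah)
a_gap = relative_gap(pzem_a, bms_a)

print(f"Ah gap: {ah_gap:.1%}")  # Ah gap: 15.0%
print(f"A gap:  {a_gap:.1%}")   # A gap:  12.5%
```

Both gaps are of the same order (15% and 12.5%), so the BMS reads consistently lower than the PZEM on both the counter and the live current.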

Do you know what could cause this please?

The hair dryer was plugged into an inverter, which was connected to the PZEM's shunt like everything else.

Also, after an hour or so, the BMS was at 50-60 degrees Celsius (122-140 Fahrenheit). I don't know if that's a normal value; it's the first time I've used it with such a high current.

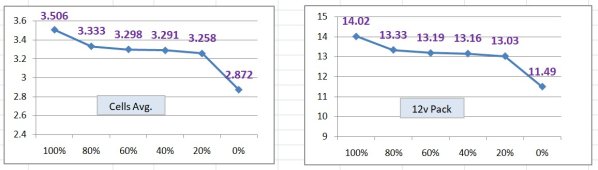

I'm now letting the cells rest, and I'll check their voltage tomorrow.

Thanks

EDIT: I forgot to do the capacity test. I'll probably discharge the whole battery tomorrow to do it.

The BMS should be more accurate above 5 W; below that, it neither shows nor counts the current.

The PZEM and the BMS have different refresh rates, so it's normal that they don't show the same values during an inrush current or brief current fluctuations.
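A minimal sketch of the refresh-rate effect. Every number here is hypothetical (the load profile, the spike, and both refresh periods); the actual refresh rates of the PZEM and the BMS are not given in this thread.

```python
def load_current(t):
    """Hypothetical load: 40 A steady, with a 100 A inrush spike from t = 2.0 s to 2.5 s."""
    return 100.0 if 2.0 <= t < 2.5 else 40.0


def sample(period_s, duration_s=10.0):
    """Read the current every `period_s` seconds, as a meter with that refresh period would."""
    n = int(duration_s / period_s)
    return [load_current(i * period_s) for i in range(n)]


fast = sample(0.5)  # hypothetical meter refreshing twice per second
slow = sample(3.0)  # hypothetical meter refreshing every 3 seconds

print(max(fast))  # 100.0 -> the fast meter happens to catch the spike
print(max(slow))  # 40.0  -> the slow meter samples at 0, 3, 6 s and misses it
```

With a steady load both meters in this sketch would read 40 A at every sample, which is why a steady current is the better condition for comparing them.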

50 to 60 °C is still acceptable; it's not too high yet.

Yes, you should do the capacity test first. Nevertheless, you can check and compare your measuring instruments while discharging to find out which are the most accurate (any meter is at best 98% accurate).
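One way to do that comparison is sketched below, assuming every instrument has the best-case ±2% accuracy mentioned above. The 42 A and 48 A readings are the ones reported in this thread; the 47.5 A clamp meter reading is purely hypothetical.

```python
def band(reading, accuracy=0.02):
    """Return the (low, high) range a reading could represent at the given accuracy."""
    return reading * (1 - accuracy), reading * (1 + accuracy)


def agree(a, b, accuracy=0.02):
    """Return True if the accuracy bands of the two readings overlap."""
    a_lo, a_hi = band(a, accuracy)
    b_lo, b_hi = band(b, accuracy)
    return a_lo <= b_hi and b_lo <= a_hi


bms_a, pzem_a = 42.0, 48.0  # readings reported in this thread
clamp_a = 47.5              # hypothetical clamp meter reading

print(agree(bms_a, pzem_a))    # False -> gap is larger than 2% accuracy explains
print(agree(clamp_a, pzem_a))  # True
print(agree(clamp_a, bms_a))   # False
```

Whichever meter's band overlaps the clamp meter reading under a steady load is the one to trust for the Ah count.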