SIdmouthsteve

New Member · Joined: Jun 30, 2022 · Messages: 22

I have been looking at my overall efficiency for tariff shifting (charging in the early morning and powering the house from stored charge).

I compared the mains energy used to charge my Growatt LiFePO4 batteries with the energy the batteries supply to the house. For every 1 kWh of charging I get 0.75 kWh out, so the overall efficiency through the charger, battery and inverter is about 75%.

So the 8.5p per kWh that enters my system effectively costs 11.3p per kWh (8.5p / 0.75) by the time it is used. That is still a very good rate for electricity (the Octopus standard daytime rate is 28.5p per kWh).
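The arithmetic above fits in a few lines of Python. This is a minimal sketch, assuming round-trip efficiency is simply metered energy out divided by metered energy in. The function names are made up for illustration, and the only figures used are the ones quoted in this post (8.5p off-peak, 75% round trip, 28.5p daytime).

```python
# Effective cost of off-peak grid energy after round-trip losses.
# Figures from the post: 8.5p/kWh off-peak rate, ~75% measured round trip,
# 28.5p/kWh standard daytime rate. Function names are hypothetical.


def round_trip_efficiency(kwh_in: float, kwh_out: float) -> float:
    """Metered energy delivered to the house divided by metered energy used to charge."""
    return kwh_out / kwh_in


def effective_cost_per_kwh(tariff_p: float, round_trip_eff: float) -> float:
    """Cost in pence of each kWh actually delivered to the house."""
    if not 0 < round_trip_eff <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return tariff_p / round_trip_eff


if __name__ == "__main__":
    eff = round_trip_efficiency(kwh_in=1.0, kwh_out=0.75)
    cost = effective_cost_per_kwh(8.5, eff)
    saving = 28.5 - cost
    print(f"round trip: {eff:.0%}")                  # round trip: 75%
    print(f"effective cost: {cost:.1f}p/kWh")        # effective cost: 11.3p/kWh
    print(f"saving vs daytime: {saving:.1f}p/kWh")   # saving vs daytime: 17.2p/kWh
```

Even after losses, each stored kWh used in the daytime saves roughly 17p compared with the standard rate.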

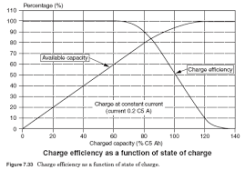

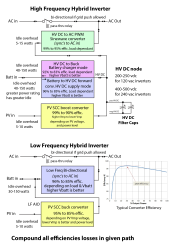

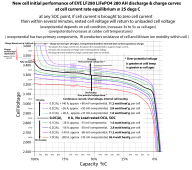

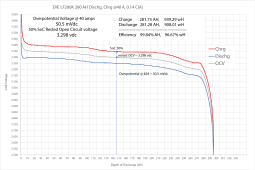

I estimate roughly 90% efficiency at each stage. The Growatt SPF5000 inverter is rated at 93% efficiency, the battery charger in the inverter is probably about 90% efficient (I am charging to 90% SOC; efficiency would be better at 80% SOC) and the 4-year-old LiFePO4 battery stack is probably 95% efficient. 90% per stage sounds good, but 0.9 × 0.9 × 0.9 is only about 73%.
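The stages are in series, so the overall efficiency is the product of the per-stage efficiencies. The sketch below uses a hypothetical helper and the per-stage guesses from the paragraph above (not measured values). It reproduces 0.9 × 0.9 × 0.9 ≈ 72.9%. Multiplying the individual guesses of 90%, 95% and 93% instead gives about 79.5%.

```python
from math import prod

# Per-stage efficiency guesses from the post (educated guesses, not measurements).
stages = {
    "charger (in SPF5000)": 0.90,
    "LiFePO4 battery": 0.95,
    "inverter (rated)": 0.93,
}


def chain_efficiency(effs) -> float:
    """Overall efficiency of stages in series: the product of each stage's efficiency."""
    return prod(effs)


print(f"0.9 x 0.9 x 0.9 = {chain_efficiency([0.9, 0.9, 0.9]):.1%}")  # 72.9%
print(f"stage guesses   = {chain_efficiency(stages.values()):.1%}")   # 79.5%
```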


Roll on summer! Free electricity from the solar panels again.

Happy Xmas.

NOTE: The discussion below suggests we should expect 80-90% efficiency. My efficiency was lower than expected, so I will check the metering etc.

PS: The 60 W idle power draw of the inverter doesn't help. My figures were based on metered energy in against metered energy out, so the per-stage efficiency figures above are educated guesswork. Has anyone got exact data?
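Here is a rough sketch of how much the 60 W idle draw might matter. It makes several assumptions that are mine, not measured. The idle draw runs 24 hours a day and comes out of the stored energy. Conversion efficiency is a hypothetical 85%. The daily charge amounts are also hypothetical. Under those assumptions the idle draw alone is 1.44 kWh a day, so it eats a bigger share of small daily cycles than of large ones.

```python
# Hypothetical sketch: a constant idle draw lowers apparent round-trip efficiency.
# ASSUMPTIONS (not from the post): idle draw runs 24 h/day and is taken from the
# stored energy; the 85% conversion efficiency and daily charges are illustrative.

IDLE_W = 60.0  # inverter idle draw quoted in the post


def idle_kwh(idle_w: float, hours: float = 24.0) -> float:
    """Energy consumed by a constant idle load over the given number of hours."""
    return idle_w * hours / 1000.0


def apparent_efficiency(kwh_charged: float, conversion_eff: float,
                        idle_w: float = IDLE_W, hours: float = 24.0) -> float:
    """Energy reaching the house per kWh charged, after conversion losses and idle draw."""
    delivered = kwh_charged * conversion_eff - idle_kwh(idle_w, hours)
    return max(delivered, 0.0) / kwh_charged


for charged in (5.0, 10.0, 15.0):  # hypothetical daily charge in kWh
    eff = apparent_efficiency(charged, conversion_eff=0.85)
    print(f"{charged:4.1f} kWh charged -> {eff:.1%} apparent efficiency")
```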
