Sorry for the late reply.

Absolutely, there was "prior work" in my "core systems" which helped me along the way.

You are not far off from what I have, by the sounds of it.

On Paho: if you look at the mqtt-lib in my code base, you will see how I wrap it in something a bit more "fit for purpose".
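
As a rough illustration of what "wrapping" Paho can mean (this is not the mqtt-lib code), here is a minimal sketch that hides Paho's raw message object behind a simpler `(topic, text)` handler. It assumes paho-mqtt's standard `on_message(client, userdata, message)` callback, where `message.topic` is a string and `message.payload` is bytes; `make_on_message` and the handler signature are hypothetical.

```python
from typing import Callable

# Hypothetical application handler: receives the topic and the decoded payload.
Handler = Callable[[str, str], None]


def make_on_message(handler: Handler):
    """Build a Paho-style on_message callback that passes on only topic and text.

    Paho calls on_message(client, userdata, message); the application code
    never has to touch the client or the raw message object.
    """
    def on_message(client, userdata, message):
        try:
            text = message.payload.decode("utf-8")
        except UnicodeDecodeError:
            # Drop non-text payloads instead of raising inside Paho's loop thread.
            return
        handler(message.topic, text)

    return on_message
```

Usage would be along the lines of `client.on_message = make_on_message(my_handler)`. With `loop_start()`, Paho runs this callback on its own network thread, so the handler should return quickly.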

The tricky part to wrap your head around with MQTT in general is that it leans heavily towards an asynchronous event model. This brings some interesting quirks which, if you are not aware of them, will cause horrendous amounts of confusion and concurrency issues with state.

The idiom of "if this, then that" is easy to envision and implement with an `on_message` handler. It is when you get to "if this and another thing, for at least 2 minutes, then that" or "two minutes after this, do that, unless this happens" that it starts to bend your head and those "interesting things" come out to play. In the event model, events may arrive effectively concurrently. Depending on some settings, they may even arrive out of order. Messages get missed, resent, dropped, queued, delayed, or repeated over and over. Any attempt to keep state sane and consistent, especially across service restarts or full reboots, involves serious engineering.
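
To make the "for at least 2 minutes" problem concrete, here is a minimal sketch of the naive approach: remembering, across events, when a condition became true. The `HeldCondition` class and its injectable clock are hypothetical. Note how fragile it is: a missed "false" message leaves the window open, a restart forgets `_true_since`, and if no further message arrives nothing ever re-checks the condition.

```python
import time
from typing import Callable, Optional


class HeldCondition:
    """Tracks whether a boolean condition has stayed true for hold_seconds.

    Each incoming event calls update() with the current condition value.
    Nothing fires on its own: if no further message arrives after the
    condition becomes true, update() is never called again, so a periodic
    tick or timer is still needed in practice.
    """

    def __init__(self, hold_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.hold_seconds = hold_seconds
        self.clock = clock
        self._true_since: Optional[float] = None

    def update(self, condition: bool) -> bool:
        now = self.clock()
        if not condition:
            self._true_since = None   # any false reading resets the window
            return False
        if self._true_since is None:
            self._true_since = now    # rising edge: start timing
        return now - self._true_since >= self.hold_seconds
```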

So I treat state like a hot potato. Assume you don't know it in advance and do something sensible.

Consider this simple(?) scenario:

You want to raise the limit on your solar charge limiter if, and only if, the battery balancer is idle. You can handle messages from both the solar charger and the balancer. The trouble is that no single message contains both pieces of data.

Why not ask the balancer? Since you assume you don't know its current state, ask for it via its MQTT "get" API. This introduces one of the hardest things to get right on an async message bus: the simple request/response flow is actually non-trivial to implement.
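
To show why request/response is non-trivial, here is a minimal sketch of the common correlation-ID pattern. The `Requester` class, the `publish` callable and the JSON fields `correlation_id` and `reply_to` are all hypothetical; MQTT 5 offers native Response Topic and Correlation Data properties, but this sketch carries them in the payload so it would also work over MQTT 3.1.1. Even this small version has to deal with timeouts, late or duplicate replies, and thread safety.

```python
import json
import threading
import uuid
from typing import Callable, Dict, Optional


class Requester:
    """Request/response over pub/sub, matched up by correlation ID.

    publish(topic, payload) is supplied by the caller (e.g. wrapping a Paho
    client). Every message on the reply topic must be fed to on_response(),
    typically from the MQTT message callback thread.
    """

    def __init__(self, publish: Callable[[str, str], None], reply_topic: str):
        self._publish = publish
        self._reply_topic = reply_topic
        self._lock = threading.Lock()
        self._pending: Dict[str, dict] = {}

    def request(self, topic: str, body: dict,
                timeout: float = 5.0) -> Optional[dict]:
        corr_id = uuid.uuid4().hex
        slot = {"event": threading.Event(), "reply": None}
        with self._lock:
            self._pending[corr_id] = slot
        try:
            msg = dict(body, correlation_id=corr_id, reply_to=self._reply_topic)
            self._publish(topic, json.dumps(msg))
            if not slot["event"].wait(timeout):
                return None               # no answer in time: caller must cope
            return slot["reply"]
        finally:
            with self._lock:
                self._pending.pop(corr_id, None)  # late replies are dropped

    def on_response(self, payload: str) -> None:
        try:
            msg = json.loads(payload)
        except ValueError:
            return                        # not JSON: ignore
        if not isinstance(msg, dict) or not isinstance(msg.get("correlation_id"), str):
            return
        with self._lock:
            slot = self._pending.get(msg["correlation_id"])
        if slot is not None and not slot["event"].is_set():
            slot["reply"] = msg           # first reply wins; duplicates ignored
            slot["event"].set()
```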

The trick I used was to simply cache the last message on all subscribed topics. The only event my automations actually receive is a notification that the cache has changed for a topic. It is then completely up to them whether they are interested in that topic at all and, if they are, how to respond.
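
A minimal sketch of that idea, assuming nothing about the real `MqttMultiTopicObservableCache` beyond the description above; `LastValueCache` and its methods are hypothetical. In a real service, `update()` would be called from the Paho message callback. One design choice here is an assumption too: this version only notifies when the value actually differs, which absorbs repeated identical messages.

```python
import threading
from typing import Callable, Dict, List, Optional

Listener = Callable[[str], None]


class LastValueCache:
    """Keeps the most recent payload per topic and notifies on change.

    Listeners receive only the topic name; they read whatever values they
    need (from any topic) back out of the cache themselves.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._values: Dict[str, str] = {}
        self._listeners: List[Listener] = []

    def add_listener(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def get(self, topic: str) -> Optional[str]:
        with self._lock:
            return self._values.get(topic)

    def update(self, topic: str, payload: str) -> None:
        with self._lock:
            changed = self._values.get(topic) != payload
            self._values[topic] = payload
        # Notify outside the lock so listeners can safely call get().
        if changed:
            for listener in list(self._listeners):
                listener(topic)
```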

My lights, for example, subscribe to both the motion sensors and the MPPT output watts for the solar panels. They do not respond to any changes in the latter, but when motion is detected they check the "last on topic" value for the solar panel power and decide whether the lights are required.
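
A sketch of how such a light automation might decide, using a plain dict to stand in for the topic cache. The topic names, the `"ON"` payloads and the 200 W "daylight" threshold are hypothetical. Solar changes are ignored as triggers and only read when motion arrives; if the solar value is unknown, the sketch does something sensible and assumes it is dark.

```python
from typing import Dict, Optional

# Hypothetical topics and threshold, for illustration only.
MOTION_TOPIC = "sensors/hallway/motion"
SOLAR_TOPIC = "solar/mppt/output_watts"
DAYLIGHT_WATTS = 200.0


def on_cache_changed(topic: str, cache: Dict[str, str]) -> Optional[str]:
    """Return "ON" if the light should switch on, otherwise None."""
    if topic != MOTION_TOPIC or cache.get(MOTION_TOPIC) != "ON":
        return None                  # solar changes never trigger anything
    solar = cache.get(SOLAR_TOPIC)
    try:
        watts = float(solar) if solar is not None else 0.0
    except ValueError:
        watts = 0.0                  # unreadable value: assume it is dark
    return "ON" if watts < DAYLIGHT_WATTS else None
```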

I solve "If this for 2 minutes, then that" by flipping it around:

"Unless told otherwise, do this for (or in) 2 minutes."

Say a temperature change means the radiator needs switching on. I put a 3-minute "timeout" on the request for the radiator. If nothing resets that "timer", the automation turns the radiator off. Doing the inverse is just as easy, but harder to explain. Basically, it's about publishing "future intentions", like publishing "calendar reminder" items with specific dates and actions, which something else watches over.
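
The radiator case is essentially a dead-man switch. Here is a minimal sketch, assuming the 3-minute timeout from the example (180 s); the `Deadman` class and its method names are hypothetical, and in practice `check()` would be driven by a timer or periodic loop while the timeout might travel inside the request message itself.

```python
import time
from typing import Callable, Optional


class Deadman:
    """Keeps an output on only while requests keep arriving.

    Each request pushes the deadline timeout seconds into the future.
    check() turns the output off once the deadline passes without a
    refresh, so a crashed or silent requester fails safe (off).
    """

    def __init__(self, timeout: float,
                 clock: Callable[[], float] = time.monotonic):
        self.timeout = timeout
        self.clock = clock
        self.deadline: Optional[float] = None

    def request(self) -> str:
        self.deadline = self.clock() + self.timeout
        return "ON"

    def check(self) -> Optional[str]:
        """Return "OFF" exactly once when the deadline has expired."""
        if self.deadline is not None and self.clock() >= self.deadline:
            self.deadline = None
            return "OFF"
        return None
```

Nothing here needs to remember *why* the radiator is on: if the requesting automation stops refreshing for any reason, the output simply times out.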

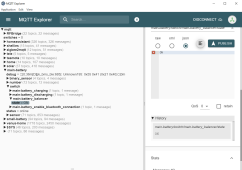

For the MQTT abstraction, the code is here:

gitlab.com

Specifically, look at the `MqttMultiTopicObservableCache` class.

If you have a scenario where you MUST process each and every message in turn, the appropriate abstraction is the `MqttTopicQueue` class.
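
For contrast with a last-value cache, here is a minimal sketch of a "process every message in order" abstraction, built on the standard library's `queue.Queue` and a single worker thread. `TopicQueue` is a hypothetical name and is not the real `MqttTopicQueue`; it only illustrates the design difference: nothing is collapsed or skipped.

```python
import logging
import queue
import threading
from typing import Callable, Tuple


class TopicQueue:
    """Handles every (topic, payload) pair in arrival order on one worker thread."""

    def __init__(self, handler: Callable[[str, str], None]):
        self._handler = handler
        self._queue: "queue.Queue[Tuple[str, str]]" = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def put(self, topic: str, payload: str) -> None:
        # Cheap and non-blocking: safe to call from the Paho network thread.
        self._queue.put((topic, payload))

    def join(self) -> None:
        """Block until every queued message has been handled."""
        self._queue.join()

    def _run(self) -> None:
        while True:
            topic, payload = self._queue.get()
            try:
                self._handler(topic, payload)
            except Exception:
                # Log and keep the worker alive for the next message.
                logging.exception("handler failed for %s", topic)
            finally:
                self._queue.task_done()
```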

For implementing services on top of this, look at something very basic, like the "MPPT Totaler" service here:

gitlab.com

In general, I recommend taking the "micro-service" concept seriously. Keep your Python services small. If you have a more complicated process to handle, it is often better to split it into several smaller services that respond to events from each other. You can see this above: the MPPT total isn't available on the bus, so I wrote a service to calculate it, then a second service that responds to that total and to the battery BMS, and a third to control the actual panel limiters.
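
To give a feel for how small such a service can be, here is a minimal sketch of the core logic of a totaler. It is not the actual "MPPT Totaler" code: the `MpptTotaler` class, the `publish` callable and the topic names are hypothetical. It publishes only once every charger has reported (never a partial sum) and only when the total changes, so downstream services get a clean event to react to.

```python
from typing import Callable, Dict, Iterable, Optional


class MpptTotaler:
    """Sums the latest output watts of several MPPT chargers."""

    def __init__(self, charger_topics: Iterable[str], total_topic: str,
                 publish: Callable[[str, str], None]):
        self._latest: Dict[str, Optional[float]] = {t: None for t in charger_topics}
        self._total_topic = total_topic
        self._publish = publish
        self._last_total: Optional[float] = None

    def on_message(self, topic: str, payload: str) -> None:
        if topic not in self._latest:
            return
        try:
            self._latest[topic] = float(payload)
        except ValueError:
            return                  # ignore garbage rather than corrupt the total
        if any(v is None for v in self._latest.values()):
            return                  # not every charger has reported yet
        total = sum(self._latest.values())
        if total != self._last_total:
            self._last_total = total
            self._publish(self._total_topic, f"{total:.1f}")
```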

Note: adding a Python micro-service layer like this does not prevent your HA setup from working as is, and you can easily integrate your own services with HA via the MQTT integration.
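
One way to surface a service's output in HA is MQTT discovery. This is a minimal sketch, assuming the default `homeassistant` discovery prefix and the documented `<prefix>/<component>/<object_id>/config` topic layout; the object ID, name and state topic used here are hypothetical.

```python
import json
from typing import Tuple


def discovery_message(object_id: str, name: str, state_topic: str,
                      prefix: str = "homeassistant") -> Tuple[str, str]:
    """Build a Home Assistant MQTT discovery (topic, payload) for a power sensor."""
    topic = f"{prefix}/sensor/{object_id}/config"
    payload = {
        "name": name,
        "unique_id": object_id,
        "state_topic": state_topic,
        "unit_of_measurement": "W",
        "device_class": "power",
        "state_class": "measurement",
    }
    return topic, json.dumps(payload)
```

The service would publish this once at startup, for example with Paho's `client.publish(topic, payload, retain=True)`, and HA would then create a sensor that follows the state topic.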