solarsimon

Solar Enthusiast

Joined: Oct 9, 2020 · Messages: 187

I'm looking to deploy Home Assistant and a bunch of other things to sit alongside it, allowing a cocktail of control & monitoring activities across a farm. There will be a mix of off-the-shelf devices plus Arduino sensors etc.

My goal is to start off with an architecture that I hopefully won't regret. I don't mind learning. If there's a script for deploying something vs a bunch of steps, I'll typically do the steps individually to get a better feel for what's under the skin, so I stand a better chance of fixing it when it goes wrong.

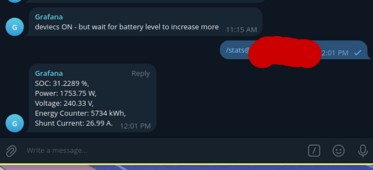

I think my minimum feature set includes: Home Assistant, Solar Assistant, Mosquitto, Node-RED, InfluxDB, Grafana (please tell me other useful ones).
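
For the Arduino sensors, one common way to tie Mosquitto and Home Assistant together is to publish readings over MQTT and let Home Assistant pick the sensors up through MQTT discovery. Below is a minimal Python sketch of building one discovery message, assuming Home Assistant's default discovery prefix `homeassistant`. The `farm/<node>/<object>/state` topic layout and the `barn1`/`temperature` ids are hypothetical examples, not anything Home Assistant requires.

```python
import json

# Hypothetical helper: builds a Home Assistant MQTT discovery message for one
# sensor (e.g. a reading an Arduino publishes to Mosquitto).
# Assumes the default discovery prefix "homeassistant"; ids are made up.
def discovery_message(node_id: str, object_id: str, name: str,
                      unit: str, device_class: str,
                      prefix: str = "homeassistant") -> tuple[str, str]:
    # Hypothetical topic layout where the device publishes its raw value.
    state_topic = f"farm/{node_id}/{object_id}/state"
    # Discovery config topic: <prefix>/<component>/<node_id>/<object_id>/config
    config_topic = f"{prefix}/sensor/{node_id}/{object_id}/config"
    payload = {
        "name": name,
        "unique_id": f"{node_id}_{object_id}",
        "state_topic": state_topic,
        "unit_of_measurement": unit,
        "device_class": device_class,
    }
    return config_topic, json.dumps(payload)


if __name__ == "__main__":
    topic, payload = discovery_message(
        "barn1", "temperature", "Barn temperature", "°C", "temperature")
    print(topic)
    print(payload)
```

The config message would normally be published once (retained) to the config topic, after which the Arduino only needs to publish plain values to the state topic.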

My starting point is an i5 HP PC running Proxmox as a hypervisor, which lets me create virtual machines to hold Home Assistant and other services. I'm leaning towards running Docker in one of the VMs and stuffing most of the various elements into individual Docker containers, in the hope that it's simpler.
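
With services spread across VMs and containers, a quick reachability check is handy when something stops working. A minimal Python sketch, assuming each service listens on its usual default TCP port (Home Assistant 8123, Mosquitto 1883, Node-RED 1880, InfluxDB 8086, Grafana 3000); if ports are remapped in Docker, edit the table. Solar Assistant is not included here.

```python
import socket

# Assumed default ports; adjust if you remap them in Docker.
SERVICES = {
    "Home Assistant": 8123,
    "Mosquitto (MQTT)": 1883,
    "Node-RED": 1880,
    "InfluxDB": 8086,
    "Grafana": 3000,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_services(host: str, services: dict[str, int] = SERVICES) -> dict[str, bool]:
    """Map each service name to whether its port accepted a TCP connection."""
    return {name: is_port_open(host, port) for name, port in services.items()}
```

For example, `check_services("192.168.1.50")` (a hypothetical Docker VM address) returns a dict such as `{"Grafana": True, ...}`. Since Home Assistant would sit in its own VM, it may need checking against a different host address than the Docker containers.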

I'd really appreciate any thoughts people have on this proposed architecture & what best practices to follow so I'm less likely to hit a dead end.

Many thanks.