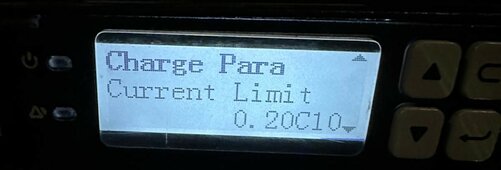

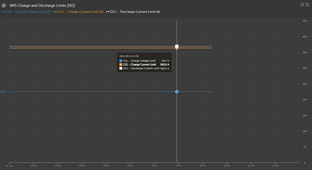

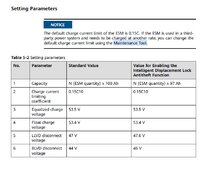

I checked the current limit set in the BMS device I have below the right rack. It says the current limit is .20C10 (image below). Next, the datasheet I included in other comments in this thread shows a normal rating of .2C (on the first page and also in the title of the charge curve). I can't dispute the math that 15x8 = 120, and I'm rooting for this to be the issue, as I'm hoping increasing the current limit is simple and will immediately increase my charge limit. 1) Other than that math, what did you find in the literature to suggest the current limit is 15 amps and not 20 amps?

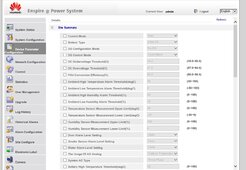

The link I provided was to a Huawei forum. The information was linked by a moderator of that forum.

Next, I'm 90% sure I understand that you are saying the voltage, when charging, should be very similar at the charger controllers, battery terminals, and reported by the BMS.

Mostly true, but there's a twist.

The chargers' directly measured voltages will always be higher, but it should be a small delta unless wire/connection resistance is too high. With DVCC/SVS enabled, the Lynx shunt is passing its voltage measurement to all GX connected chargers, so losses through their wiring are not considered.
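To put rough numbers on that delta, here is a minimal sketch of the Ohm's law relationship (voltage drop = current x resistance). The resistance and current values are hypothetical and picked only for illustration; they are not measurements from this system, and `charger_terminal_voltage` is just an illustrative helper name.

```python
def charger_terminal_voltage(battery_v, current_a, wiring_resistance_ohm):
    """Voltage a charger measures at its own terminals while pushing current.

    The charger sits "upstream" of the wiring, so it sees the battery
    voltage plus the drop across the cable and connections (V = I * R).
    """
    return battery_v + current_a * wiring_resistance_ohm


# Hypothetical values for illustration only (not from this system).
battery_v = 53.25   # volts at the battery terminals
current_a = 60.0    # charge current through this one charger's wiring

for r_milliohm in (2.0, 10.0):  # assumed "good" vs "poor" wiring resistance
    v = charger_terminal_voltage(battery_v, current_a, r_milliohm / 1000.0)
    print(f"{r_milliohm:.0f} mOhm: charger reads {v:.2f} V "
          f"(delta {v - battery_v:.2f} V)")
```

With SVS enabled the chargers regulate to the shunt's reading instead of their own, which is why this drop stops mattering for the charge voltage.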

In your case, the shunt voltage is the "master" for all Victron hardware. Since the shunt is not measuring a voltage influenced by current, it SHOULD measure nearly exactly the same as the BMS.

It took me a minute to realize that the voltage difference was actually INSIDE the battery.

So, to summarize, with DVCC enabled and SVS enabled, the shunt voltage should be nearly identical to the BMS voltage, as the shunt is measuring the true voltage at the battery terminals and passing it to all Victron chargers. Any difference between the BMS and the shunt is either simple measurement error, slight resistance issues, or the BMS boost function.

To help put the nail in the coffin for my understanding, I would like to propose a hypothetical example and get your confirmation please. Looking at the charge curve on page 2 of the datasheet, if I'm at 100 minutes of charge time (.2C, 35 Celsius), the charge voltage should be 50V. 2) Therefore, regardless of what current the batteries are getting charged at, the voltage measured at the charge controllers and battery terminals should be close to 50V?

100 minutes of charge time is measured from a completely empty battery. If you start at 20% charge, you would start at roughly the 60 minute mark, and your 100 minutes of charging would correspond to a voltage value at the 160 minute mark.
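As a rough sketch of that offset arithmetic: the function below assumes an idealized constant-current charge at the curve's 0.2C rate with no taper, so a full charge takes 60 / 0.2 = 300 minutes. The name `curve_minute` is made up for this example.

```python
def curve_minute(start_soc_pct, elapsed_min, c_rate=0.2):
    """Where you are on a from-empty charge curve, in minutes.

    Assumes an idealized constant-current charge at `c_rate`
    (no taper near full), so 0% to 100% takes 60 / c_rate minutes.
    """
    full_charge_min = 60.0 / c_rate
    start_offset_min = full_charge_min * start_soc_pct / 100.0
    return start_offset_min + elapsed_min


# Starting at 20% SoC and charging for 100 minutes at 0.2C.
position = curve_minute(start_soc_pct=20, elapsed_min=100)
print(f"Read the curve at about the {position:.0f} minute mark")
```

This only tells you where to read the curve; it does not account for charging at a different current than the curve was drawn for.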

You had it mostly right until the last sentence. When you said "regardless of current" - that is incorrect. Those curves are specifically for 20A charges from 0% SoC. More current means higher voltage and less current means lower voltage at any given time as measured from 0%.

Lastly, curves are guidelines. They won't correlate 100% to reality.

3) If I have the installer increase my wire sizing to the recommended sizing, what charge current limit do you recommend, and why, for my system? It is a little confusing for me that the batteries report 58V and 100 amps as the maximum charge parameters, but then the default is 15, or 20. That said, I'm assuming that for the life of the battery, it is better to consistently charge at lower rates even if the maximum is significantly higher. I understand that. My plan would be to leave the charge current at a lower amount, 15-20 amps per battery, for most days of the year, but sometimes I will want the option to charge faster. I run two houses (2 electric ovens, 2 water heaters, 2 fridges, 2 washers, 2 dryers, 2 dishwashers), a pool pump, and a hot tub pump all from this system, and the 2nd house is a full-time Airbnb. Sometimes it will be worth it to me to reduce the life of the batteries in exchange for happy guests and family. While of course I want the system to last a good number of years, I'm off grid and my primary objective is for the system to supply power and satisfy our needs/wants. I make the same conscious choices with our Tesla in the States: there are times I have run it down to 2% charge and times I supercharge it consistently up to 90-100%. My battery is degraded because of this, but the vehicle serves its purpose, and for me the benefits are enough to justify the degradation.

First, I would size the wires exactly per the Victron manual recommendations. Between the Lynx and the inverters, EACH inverter needs 2X 1/0 cables OR 1X 4/0 cable. Period. Based on 60A, the MPPTs need at least 4 AWG.

Between the batteries and the Lynx, the cables need to be sized according to the anticipated current. If you had each battery individually connected to the Lynx (and you'd need more Power Ins), 2 AWG would likely be fine, BUT since some are paralleled before connecting to the Lynx, it depends.

A competent electrician should be able to size wires in their sleep, on Ambien, while sleeping off a wicked night of binge drinking.

Next steps: I have asked the installer if he knows whether the BMS current limit sets each battery's limit, and either way, what the current limit is for each battery (is it 15 amps or 20 amps?). If he thinks the BMS current limit doesn't override the battery limit, and the batteries are currently limited to 15 amps, then I will work with him to raise the current limit of each battery. If that successfully increases the charging current, then we will have finally solved this issue (after 13 months of living with this system, numerous attempts by the installer to fix it, numerous attempts by the supplier, an attempt by an installer in Colorado to solve it through a video call while accessing my system remotely, and my researching it as much as I can). Don't worry, I haven't forgotten about the wires being undersized; I will get them replaced with larger wires soon.

Similar to what you propose, here is how I would proceed:

First: Program the BMS for 0.5C. This likely needs to be done individually on each battery. I suspect that if a single battery is at a lower value, that will force all of them to the lower value. If they are connected to some kind of management hub that passes settings to all, you may not need to do them individually. I chose 0.5C to try to keep the battery from engaging the boost function.

Second: Set DVCC for 53.25V and 120A charge current.

Since this is a 15S battery, the chargers being set to > 54V may further encourage triggering the voltage boost function and tapering current. 53.25 is 3.55V/cell, which is more than adequate for fast charges in most cases.
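The per-cell figure is just the pack voltage divided by the number of cells in series. A minimal sketch for comparing the voltages mentioned in this thread (53.25V, 54V, and the 58V maximum the batteries report); the helper name is only for illustration:

```python
CELLS_IN_SERIES = 15  # "15S" battery, per the thread


def per_cell_voltage(pack_v, cells=CELLS_IN_SERIES):
    """Average cell voltage for a given pack voltage (assumes balanced cells)."""
    return pack_v / cells


for pack_v in (53.25, 54.0, 58.0):
    print(f"{pack_v:.2f} V pack: {per_cell_voltage(pack_v):.3f} V/cell")
```

Note this is an average. Individual cells can sit above or below it if the pack is out of balance, which is one reason a BMS may react before the pack voltage looks high.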

I also suspect that the batteries may have an SoC based taper as well, e.g., when charging at 50% SoC, there will be no restrictions, but as cell voltages rise and SoC increases, the rules for voltage boosting may become more "protective".

I would then experiment by raising current first and then voltage (up to 54V) to see if you can get a higher charging current.
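For the current side of that experiment, here is a rough sketch of how the limits might stack up. It rests on assumptions that are not confirmed: 8 batteries (from the 15x8 = 120 arithmetic), roughly 100Ah each (0.2C being 20A), and my suspicion above that the lowest single-battery limit gets applied to every battery. The function names are made up for illustration.

```python
CAPACITY_AH = 100  # assumed: 0.2C = 20 A implies roughly 100 Ah per battery


def c_rate_to_amps(c_rate, capacity_ah=CAPACITY_AH):
    """Convert a C-rate setting to amps for one battery."""
    return c_rate * capacity_ah


def bank_limit_amps(per_battery_amps):
    """Total limit if the lowest battery setting is forced onto all of them.

    This "lowest wins" behavior is a suspicion, not confirmed BMS behavior.
    """
    return min(per_battery_amps) * len(per_battery_amps)


def effective_charge_amps(per_battery_amps, dvcc_limit_amps):
    """Whichever is lower wins: the battery bank limit or the DVCC limit."""
    return min(bank_limit_amps(per_battery_amps), dvcc_limit_amps)


# All 8 batteries at 0.5C (50 A each), DVCC set to 120 A: DVCC is the cap.
all_raised = [c_rate_to_amps(0.5)] * 8
print(effective_charge_amps(all_raised, dvcc_limit_amps=120.0))

# One battery left at 15 A: the bank drops to 15 A x 8 under "lowest wins".
one_missed = [c_rate_to_amps(0.5)] * 7 + [15.0]
print(bank_limit_amps(one_missed))
```

If the observed charge current stays well under the lower of the two numbers, the limit is coming from somewhere else, such as the boost function or an SoC based taper.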