After reading a bit more, I think I finally had that "light bulb" moment...

Voltage dictates which direction current will flow: from higher voltage to lower voltage. The component receiving the current determines how much it will try to pull, and the component supplying the current determines how much is available. Is that right?

So, considering my original scenario (with a few more details)...

I have a nearly empty 12V 18Ah battery at 12.9V. The safe charging rate is 0.2C or 3.6A.

I also have a 150V solar array at 10A going into an MPPT controller (specifically MPPT, not a PWM charge controller). The MPPT controller will output 14.4V at up to 104A (1500W / 14.4V, ignoring conversion losses). Because the battery voltage is lower than the MPPT controller's output voltage, the battery will draw current. However, the battery has very low impedance and therefore will likely pull all 104A available, which is unsafe.
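The numbers above follow from the C-rate definition and from power balance across the converter. Here's a minimal sketch in Python, assuming an ideal (lossless) MPPT converter unless an efficiency is passed in; the function names are hypothetical:

```python
def safe_charge_current(capacity_ah: float, c_rate: float) -> float:
    """Charge current for a given C-rate: I = C_rate * capacity."""
    return c_rate * capacity_ah


def mppt_output_current(pv_v: float, pv_a: float, out_v: float,
                        efficiency: float = 1.0) -> float:
    """Output current from power balance: P_in * efficiency = V_out * I_out.

    efficiency=1.0 assumes a lossless converter (real controllers lose a few percent).
    """
    return pv_v * pv_a * efficiency / out_v


capacity_ah = 18.0
safe_a = safe_charge_current(capacity_ah, 0.2)        # 0.2C of 18Ah
available_a = mppt_output_current(150.0, 10.0, 14.4)  # 1500 W / 14.4 V
print(f"safe: {safe_a:.1f} A, available: {available_a:.1f} A, "
      f"that is {available_a / capacity_ah:.1f}C")
# → safe: 3.6 A, available: 104.2 A, that is 5.8C
```

So with nothing limiting it, the controller could offer roughly 29 times the 3.6A safe rate.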

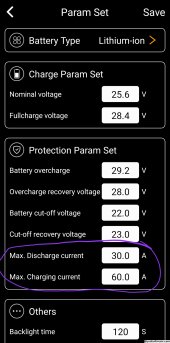

If my understanding above is correct, are there solar charge controllers (SCCs) that will limit current to a value I set? Or, what devices could I place between the MPPT controller and the battery to limit the available current?

In this scenario, the SCC will have three charge stages: bulk, absorb, and float. Each stage does something different.

Bulk is the first stage. To simplify (this isn't entirely accurate), the SCC matches your current battery voltage (actually, slightly above it) and allows current to flow. The amount of current is capped at a setting you adjust, or at the maximum the SCC / array can deliver (based on hardware limitations, available energy from the sun, etc.). Eventually, as the battery becomes more fully charged, its internal resistance will "slow the charge" down, at which point the SCC will usually switch to absorb mode.
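The bulk current described above is effectively the smallest of three limits. Below is a minimal Python sketch of a simplified three-stage charger. It swaps in a common textbook model (bulk ends when the battery reaches the absorb voltage setpoint; absorb ends when charge current tapers below a tail-current threshold), not any particular controller's firmware; the class, field names, and threshold values are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class ChargerSettings:
    current_limit_a: float  # user-adjustable max charge current (hypothetical setting)
    absorb_v: float         # bulk -> absorb when battery reaches this voltage
    tail_current_a: float   # absorb -> float when current tapers below this


def bulk_current(s: ChargerSettings, controller_max_a: float,
                 pv_power_w: float, battery_v: float) -> float:
    """Bulk current is capped by the user setting, the hardware, and available sun."""
    solar_limited_a = pv_power_w / battery_v
    return min(s.current_limit_a, controller_max_a, solar_limited_a)


def next_stage(stage: str, s: ChargerSettings, battery_v: float, charge_a: float) -> str:
    """Advance bulk -> absorb -> float; once in float, this sketch stays there."""
    if stage == "bulk" and battery_v >= s.absorb_v:
        return "absorb"
    if stage == "absorb" and charge_a <= s.tail_current_a:
        return "float"
    return stage


settings = ChargerSettings(current_limit_a=3.6, absorb_v=14.4, tail_current_a=0.5)
print(bulk_current(settings, controller_max_a=100.0, pv_power_w=1500.0, battery_v=12.9))  # → 3.6
print(next_stage("bulk", settings, battery_v=14.4, charge_a=3.6))                         # → absorb
```

With a 3.6A limit set, the 1500W of available sun no longer matters during bulk; the user setting is the binding constraint.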

So yes, if your small battery can't handle the max charge rate available, you'll want to change the setting to limit it.

*Edited to add*:

BTW, when people size a battery system for solar, they usually only ask "how much capacity do I need to provide X power for Y amount of time?" They often forget to also ask "what are the battery's safe charge and discharge rates?" Luckily, the "X power for Y time" calculation usually makes the bank big enough that the safe charge/discharge rates are within acceptable limits.
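One way to fold both questions into a single sizing step is to take the largest capacity required by energy, by charge current, and by discharge current. A minimal sketch, assuming the battery's maximum C-rates and usable fraction come from its datasheet; the function name and the example numbers are hypothetical:

```python
def min_capacity_ah(load_w: float, hours: float, system_v: float,
                    max_charge_a: float, max_discharge_a: float,
                    max_charge_c: float, max_discharge_c: float,
                    usable_fraction: float = 1.0) -> float:
    """Smallest bank (Ah) that satisfies energy, charge-rate, and discharge-rate limits."""
    energy_ah = load_w * hours / system_v / usable_fraction  # "X power for Y time"
    charge_ah = max_charge_a / max_charge_c                 # keep charge current within its C-rate
    discharge_ah = max_discharge_a / max_discharge_c        # keep discharge current within its C-rate
    return max(energy_ah, charge_ah, discharge_ah)


# Hypothetical 12V example: 200 W for 5 h, 0.2C charge limit, 0.5C discharge limit.
print(round(min_capacity_ah(200, 5, 12, 3.6, 200 / 12, 0.2, 0.5), 1))          # → 83.3 (energy dominates)
print(round(min_capacity_ah(200, 5, 12, 1500 / 14.4, 200 / 12, 0.2, 0.5), 1))  # → 520.8 (charge rate dominates)
```

The second line shows the exception: with an unlimited ~104A charge current, the charge-rate requirement, not the energy requirement, sets the bank size.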

Looking at your example scenario, there are other reasons you likely wouldn't want an overly small battery. For example, I have a Schneider system, and Schneider recommends a minimum of 440Ah of 48V batteries per inverter. One of the reasons: as the inverter ramps up to satisfy the power demands of the loads, it's drawing in available current from the SCCs. However, if a large load shuts off suddenly, it takes a split second for that inverter (and the SCCs) to "ramp down" accordingly. In the meantime, that excess power needs to go somewhere, and it often shows up as a large spike in charge current to the batteries. With a small battery (18Ah, in your example), that spike would again far exceed the safe charge current.
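A rough estimate of that spike: the power the shed load was drawing briefly has nowhere to go but the battery, so the transient charge current is about P / V_battery. A minimal sketch, assuming the full shed power reaches the battery for that split second (real ramp-down behavior varies by equipment); the 4000W shed load is a hypothetical figure, not a Schneider specification:

```python
def transient_c_rate(shed_power_w: float, battery_v: float, capacity_ah: float) -> float:
    """C-rate of the charge spike if the shed load's power is dumped into the battery."""
    spike_a = shed_power_w / battery_v  # I = P / V
    return spike_a / capacity_ah


# Hypothetical 4000 W load dropping off a 48V, 440Ah bank (the recommended minimum above).
print(f"{transient_c_rate(4000, 48, 440):.2f}C")   # → 0.19C
# Same idea with 1500 W (the array's full output) dumped into the 18Ah battery at 12.9V.
print(f"{transient_c_rate(1500, 12.9, 18):.2f}C")  # → 6.46C
```

The larger bank absorbs the spike at roughly the 0.2C level used earlier, while the small battery sees over 30 times that.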