krby

Or... "How many watt hours are in a single LiFePO4 cell?"

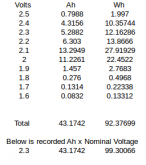

Before I bought my first big LFE, I kept a spreadsheet of various options. To know whether a given battery was "enough" for my use case AND to compare price per kilowatt-hour, I estimated the capacity of each battery in kWh like this:

amp hours x estimated_voltage / 1000 = kWh

I then derated this value by some DoD percentage (80%, 85%, or 90%, depending on how hard I wanted to be on the batteries) to give me usable kWh.
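For anyone wanting to reproduce the spreadsheet math, here is a minimal Python sketch of it. The function and parameter names are my own (hypothetical), and it assumes pack voltage = cells in series × an assumed per-cell voltage.

```python
def usable_kwh(amp_hours: float, cells_in_series: int,
               volts_per_cell: float = 3.2, dod: float = 0.9) -> float:
    """Estimate usable energy: Ah x pack voltage / 1000, derated by DoD."""
    if not 0.0 < dod <= 1.0:
        raise ValueError("dod must be a fraction in (0, 1]")
    pack_voltage = cells_in_series * volts_per_cell  # e.g. 8S x 3.2V = 25.6V
    nameplate_kwh = amp_hours * pack_voltage / 1000
    return nameplate_kwh * dod


# 24V (8S) 200Ah pack at 3.2V/cell, derated at each DoD level
for dod in (0.80, 0.85, 0.90):
    print(f"{dod:.0%} DoD: {usable_kwh(200, 8, 3.2, dod):.2f} kWh")
```

The DoD check just guards against typing 90 instead of 0.9 in a cell.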

My question is about the estimated_voltage value above. Originally I used 3.2V per cell, because many sellers of these batteries list that as the nominal voltage (12.8/25.6/51.2V for 4S/8S/16S, respectively). Is that the right value? This page shows a chart where LFE spends most of its time at 3.3V. I know it's going to be complicated by the discharge current (for me, between 0.25C and 0.5C in the worst case), but I'm just looking for an everyday number to plug in.
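To put the question in perspective, this sketch prints the nominal pack voltage at 3.2V/cell for 4S/8S/16S (reproducing the 12.8/25.6/51.2V figures) and the nameplate kWh of an 8S 200Ah pack at each candidate per-cell voltage. Function names are hypothetical. Since kWh scales linearly with voltage, moving from 3.2V to 3.3V shifts every estimate by 3.3/3.2 − 1 ≈ 3.1%, whatever the pack size.

```python
CANDIDATE_VOLTS_PER_CELL = (3.2, 3.25, 3.3)
SERIES_COUNTS = (4, 8, 16)


def nameplate_kwh(amp_hours: float, cells_in_series: int, volts_per_cell: float) -> float:
    """Ah x (cells x V/cell) / 1000, with no DoD derating."""
    return amp_hours * cells_in_series * volts_per_cell / 1000


def relative_change(v_from: float, v_to: float) -> float:
    """Fractional change in the kWh estimate when swapping the per-cell voltage."""
    return v_to / v_from - 1


for s in SERIES_COUNTS:
    print(f"{s}S nominal at 3.2V/cell: {s * 3.2:.1f} V")

for v in CANDIDATE_VOLTS_PER_CELL:
    print(f"200Ah 8S at {v} V/cell: {nameplate_kwh(200, 8, v):.2f} kWh")

print(f"3.2V -> 3.3V changes the estimate by {relative_change(3.2, 3.3):.1%}")
```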

What got me thinking about this: over the weekend I did a couple of discharge tests (ending up at about 0.3C) on a new 24V 200Ah LFE and got about 4.45kWh of usable AC out of a Victron MultiPlus. Given the inverter overhead I saw during the discharge, that's about 4.9-5.1kWh of DC from the battery, which is more than I expected, especially since I had the charge voltage lower and the discharge cut-off higher than the manufacturer recommended.
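The AC-to-DC step is a division by the inverter's average efficiency. In this sketch the efficiency values are assumptions, not Victron specifications, and the function names are hypothetical. Working backwards, the 4.9-5.1kWh DC range corresponds to an average efficiency of roughly 87-91% on the 4.45kWh of AC measured.

```python
def dc_energy_kwh(ac_kwh: float, inverter_efficiency: float) -> float:
    """DC energy drawn from the battery = AC energy delivered / inverter efficiency."""
    if not 0.0 < inverter_efficiency <= 1.0:
        raise ValueError("efficiency must be a fraction in (0, 1]")
    return ac_kwh / inverter_efficiency


def implied_efficiency(ac_kwh: float, dc_kwh: float) -> float:
    """Average inverter efficiency implied by an AC reading and a DC estimate."""
    return ac_kwh / dc_kwh


AC_MEASURED_KWH = 4.45  # from the discharge test described above

# Assumed average inverter efficiencies (illustrative, not datasheet values)
for eff in (0.87, 0.89, 0.91):
    print(f"{eff:.0%} efficient -> {dc_energy_kwh(AC_MEASURED_KWH, eff):.2f} kWh DC")

for dc in (4.9, 5.1):
    print(f"{dc} kWh DC implies {implied_efficiency(AC_MEASURED_KWH, dc):.1%} efficiency")
```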

So, is 3.2V too conservative? Should I estimate with 3.25 or 3.3V per cell?
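One way to settle this for a specific battery: the per-cell voltage that makes Ah × V = Wh exact is the charge-weighted average voltage over the discharge, i.e. total Wh divided by total Ah, and it will shift with discharge current and the chosen charge/cut-off window. If a shunt or BMS can log pack voltage against cumulative Ah removed, a sketch like this computes it with the trapezoidal rule. The function name and the sample numbers are hypothetical, made up for illustration, not measured data.

```python
from typing import Sequence


def average_volts_per_cell(amp_hours: Sequence[float], pack_volts: Sequence[float],
                           cells_in_series: int) -> float:
    """Charge-weighted mean cell voltage: integral of V dAh, divided by total Ah and cell count."""
    if len(amp_hours) != len(pack_volts) or len(amp_hours) < 2:
        raise ValueError("need at least two matching (Ah, V) samples")
    watt_hours = 0.0
    for i in range(1, len(amp_hours)):
        d_ah = amp_hours[i] - amp_hours[i - 1]
        # Trapezoid: mean of the two voltage samples times the Ah step
        watt_hours += 0.5 * (pack_volts[i] + pack_volts[i - 1]) * d_ah
    total_ah = amp_hours[-1] - amp_hours[0]
    return watt_hours / total_ah / cells_in_series


# Hypothetical 8S log (cumulative Ah removed, pack voltage); illustrative only
ah_log = [0, 20, 100, 180, 200]
v_log = [26.8, 26.4, 26.2, 25.8, 24.0]
print(f"{average_volts_per_cell(ah_log, v_log, 8):.3f} V/cell")
```

The result can then be plugged straight in as the per-cell voltage in the Ah × V / 1000 formula.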
