Light gives voltage: even dim light brings a panel close to its open-circuit voltage (Voc).

Intensity of light gives current (amperage), and with it, heat.

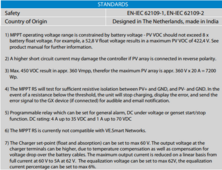

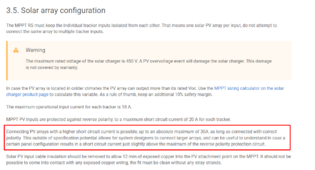

At sunrise, very little energy is hitting the panels, but even that light is enough for them to reach nearly full Voc, and there isn't enough energy to heat them and pull the voltage down. Even if this lasts only a fraction of a second, it may be long enough to blow the MPPT if Voc exceeds the MPPT's maximum input voltage.

It's important to be specific. "Rated" voltage may refer to Vmp, since that's the voltage at which the panel produces rated power. Voc is typically about 20% higher than Vmp.
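If all you have is the "rated" (Vmp) figure, the 20% rule of thumb above gives a rough Voc estimate. This is a minimal sketch only: the 1.20 ratio is the approximate figure from this post rather than a datasheet value, and the 41.5 V panel and the function name are hypothetical examples.

```python
# Rough fallback only: always prefer the Voc printed on the panel's datasheet.
VOC_OVER_VMP = 1.20  # assumption: "Voc is typically 20% higher than Vmp"


def estimate_voc_from_vmp(vmp_volts: float) -> float:
    """Estimate a panel's open-circuit voltage from its rated Vmp."""
    return vmp_volts * VOC_OVER_VMP


# Hypothetical panel rated at 41.5 V Vmp
print(f"Estimated Voc: {estimate_voc_from_vmp(41.5):.1f} V")
```

Treat the result as a lower bound on your worry, not a design number; the real Voc and its cold-weather rise still have to come from the datasheet.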

This will be true, but it can't be counted on. In practice, the MPPT will likely start pulling current to produce power, which drags the array voltage down from Voc. The danger is at the edges: for example, when low-temperature charge protection is active and the battery refuses to accept current, the MPPT can't draw current, so it can't pull the voltage down.

A very cold, windy day with intermittent clouds can keep your panels at ambient temperature AND briefly concentrate sunlight beyond normal levels (the cloud-edge effect). Voltage and current respond almost instantly, but panel heating is much slower.

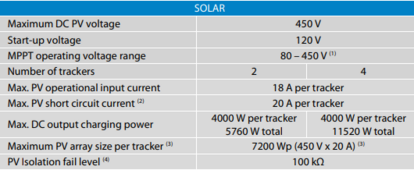

Bottom line... stop trying to find excuses to push your array voltage higher. Refer to this table:

There are calculators like this one made by @upnorthandpersonal which help you calculate PV array voltage and power for low temperatures based on your panels' specifications. These are great tools and will give more precise...

diysolarforum.com


If the record low in your area is 0°F, you need to allow an 18% temperature margin on your MPPT, i.e., multiply the array Voc by 1.18. If the result exceeds the MPPT's maximum input voltage, it's bad.
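The table check boils down to a little arithmetic. Below is a minimal sketch, assuming the panels are wired in series (so their Voc values add) and that the margin factor is read from the table, e.g. 1.18 for a 0°F record low. The function name, the 49.5 V panel, and the 150 V MPPT limit are hypothetical examples.

```python
def fits_mppt(panel_voc: float, panels_in_series: int,
              temp_factor: float, mppt_max_volts: float) -> bool:
    """Return True if the cold-corrected string Voc stays within the MPPT limit."""
    string_voc = panel_voc * panels_in_series  # series panels add their Voc
    cold_voc = string_voc * temp_factor        # e.g. 1.18 for a 0°F record low
    return cold_voc <= mppt_max_volts


# Hypothetical 49.5 V Voc panels on a 150 V MPPT with a 0°F record low
for n in (2, 3):
    cold = 49.5 * n * 1.18
    print(f"{n} in series: {cold:.1f} V, fits: {fits_mppt(49.5, n, 1.18, 150.0)}")
```

Three of these panels read 148.5 V at 25°C, just under the 150 V limit, but the 1.18 margin pushes that to roughly 175 V. That is exactly the cold-sunrise case described above.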

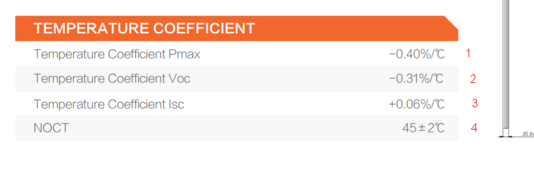

As an alternative to the table above, you can run your own calculation using your panels' specific Voc temperature coefficient.
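Here is a minimal sketch of that calculation. It assumes the coefficient is given in %/°C (negative for Voc) relative to the 25°C STC rating. It also assumes the cells sit at the record-low ambient temperature at sunrise, as described above. The -0.27 %/°C coefficient, the 49.5 V panel, and the function names are hypothetical; use the numbers from your own datasheet.

```python
def f_to_c(temp_f: float) -> float:
    """Convert Fahrenheit to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def voc_at_temp(voc_stc: float, coeff_pct_per_c: float, cell_temp_c: float,
                stc_temp_c: float = 25.0) -> float:
    """Scale the datasheet (STC) Voc linearly to another cell temperature.

    coeff_pct_per_c is the Voc temperature coefficient in %/°C, e.g. -0.27.
    A negative coefficient and a cell colder than 25°C give a higher Voc.
    """
    delta_t = cell_temp_c - stc_temp_c
    return voc_stc * (1.0 + coeff_pct_per_c / 100.0 * delta_t)


# Hypothetical panel: 49.5 V Voc, -0.27 %/°C, record low of 0°F
cold_voc = voc_at_temp(49.5, -0.27, f_to_c(0.0))
print(f"Cold Voc per panel: {cold_voc:.1f} V")
```

Multiply the per-panel result by the number of panels in series and compare it with the MPPT's maximum input voltage, just as with the table factor.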