> The battery was fully charged and balanced.

If you are looking for someone who has been consuming alcohol to help you, I'll do my best.

So watt hours give you a rough idea of how much energy is in your battery, and a rough idea of how much power your load will consume. Kinda like saying "I bought a 12 pack of brews for my uncle, and he was sober three hours later when he should have been plastered all night."

With watt hours, much like alcohol in my uncle, not all of them go to the place where they have an effect. Some watt hours (alcohol) get consumed by the load (the brain). However, if the uncle in question is a 300 lb Irish mobster, he probably metabolized a good share of it elsewhere in the circuit (his liver).

However, if you measure amp hours, you are measuring how much alcohol went down the hatch, and that gives you a true sense of how much the 12 pack was able to deliver.

Also, unlike a true 12 pack, if the cans aren't evenly full, a battery will only be able to deliver the amount that is in the least full can. So it is important to know that the battery cells are properly balanced when fully charged.
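To make the "least full can" point concrete: in a series pack every cell carries the same current, so the pack stops delivering when the emptiest cell reaches its cutoff. A minimal sketch, assuming a hypothetical 4-cell (4S) pack whose cells start discharge with different amounts of usable charge (the numbers are made up for illustration):

```python
# Hypothetical 4S LiFePO4 pack: usable charge (Ah) left in each cell at the start
cell_charge_ah = [117.0, 118.5, 60.0, 116.0]  # one "can" is only about half full

NOMINAL_PACK_V = 12.8  # 4 cells x 3.2 V nominal


def deliverable_ah(cells_ah):
    """Series cells all carry the same current, so the emptiest cell sets the limit."""
    return min(cells_ah)


ah = deliverable_ah(cell_charge_ah)
print(f"Deliverable: {ah:.1f} Ah, about {ah * NOMINAL_PACK_V:.0f} Wh")
```

Topping up the three full cells further wouldn't help here; only bringing the low cell up (balancing) or replacing it would.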

Of course, depending on how reputable the vendor you bought the 12 pack from is, they could have sold you some O'Doul's. But I hope that is not the case.

Battery Overdischarge

- Thread starter Bob_Dole

Vigo

Solar Addict

If a 1500wh battery was fully charged and balanced and you only got <820wh out of it, it's got a problem.

Of course, if you aren't tracking actual consumption somehow (battery bms, measurement in the charge controller, etc) then we can only assume that what should have been happening is what actually was happening.

Like, if you just charge a lithium battery until the BMS disconnects it but don't track what went into it, the BMS might have disconnected it because a bad cell hit too high a voltage while all the other cells are only 'half full'. In that case you can only drain maybe half the rated capacity before the BMS disconnects it again. If the BMS has communication, you can see cell voltages, track how many amp hours went into it, and actually 'know' that it is balanced and full. You said it was balanced and full, so giving that the benefit of the doubt, we can move on to other possibilities.

One possible issue is the ~820w number you assigned to the load might be inaccurate. If it was based on something like taking the 'rated amps' on a sticker on the side of an appliance, and multiplying by the nominal voltage (say 120v) to get approximate Watts of load, that can be WAY different from reality depending on what type of device it is because some fluctuate hugely based on conditions, and some don't. But if the number was 'calculated' by your charge controller or your bms it's likely to be fairly accurate, or if you measured volts and amps in quick succession and did the math yourself, also likely to be fairly accurate.

Another possible issue is voltage drop from poor connections dissipating watts as heat. I think it's extremely unlikely for that to account for the entire problem here, because if something was turning the other ~700 W into heat for most of an hour, I think you would notice! But it may account for some of it, and depending on where the volts and amps are measured, the BMS or charge controller screens may or may not include those watts. If the issue were between the cells and the BMS, the BMS would not capture it; if it were somewhere between the BMS and the load, it would.
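A rough sanity check on the "you would notice" point: power lost in a resistive connection is P = I²R. A minimal sketch, assuming roughly 65 A of DC current (about 820 W drawn from a ~12.6 V battery) and a hypothetical 1 milliohm connection:

```python
DC_CURRENT_A = 65.0  # assumption: ~820 W drawn from a ~12.6 V battery


def connection_loss_w(current_a, resistance_ohm):
    """Heat dissipated in a resistive connection: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


def resistance_for_loss(current_a, loss_w):
    """Resistance that would be needed to burn loss_w at current_a."""
    return loss_w / current_a ** 2


# A mediocre crimp or loose lug, on the order of a milliohm (hypothetical value)
print(f"1 mOhm at {DC_CURRENT_A:.0f} A: {connection_loss_w(DC_CURRENT_A, 0.001):.1f} W")

# Resistance needed to account for ~700 W of "missing" power as heat
r = resistance_for_loss(DC_CURRENT_A, 700.0)
print(f"~700 W of heat needs about {r * 1000:.0f} mOhm")
```

A resistance that large would also drop roughly 10 V at that current, so the inverter would cut out long before; either way, hundreds of watts of heat in a connection is not subtle.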

So I guess the next question is: what is this actual load, and how did you determine the 820 W number?

We may have to circle back to how you determined 'fully charged and balanced' if no smoking gun is ever found downstream of the battery.

Thank you. Great feedback. I appreciate it.

The 820 watts was read off the output of my inverter. And I do know my device was drawing 6-7 amps at 120 V.
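A quick cross-check of those two readings, as a minimal sketch. Volts × amps on the AC side gives volt-amperes; for loads with a power factor below 1 (motors, many power supplies) the real watts are lower, so this bracket is a plausibility check rather than an exact figure:

```python
AC_VOLTS = 120.0
AC_AMPS_RANGE = (6.0, 7.0)   # the 6-7 A the device was drawing
INVERTER_READING_W = 820.0   # inverter output display

# Volts x amps gives volt-amperes (VA); real watts can be lower if power factor < 1
low_va, high_va = (AC_VOLTS * amps for amps in AC_AMPS_RANGE)
print(f"V x A bracket: {low_va:.0f}-{high_va:.0f} VA, inverter shows {INVERTER_READING_W:.0f} W")
print("consistent" if low_va <= INVERTER_READING_W <= high_va else "check the measurements")
```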

I guess I am assuming the battery is balanced; it is a brand new, unused battery. I charged it with my solar after install, and the charge controller put about 900 Wh into it before showing the battery at 14.6 volts and reducing charging to a trickle.

The battery does not have communication.

So after the BMS shut off, with the load removed, the battery showed 12.5 volts. That should be near full discharge, correct? Using my Renogy app over the next few days, I can track how many Ah/Wh are put into the battery. If that number is not close to the battery's rated 120 Ah / 1500 Wh, would that indicate a battery issue?
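Tracking what goes in (or comes out) amounts to integrating current over time, which is what an amp-hour counter does. A minimal sketch, assuming you have periodic (time, amps, volts) samples; the sample values are hypothetical, and how the Renogy app computes its own totals isn't known here:

```python
# Hypothetical log: (seconds since start, charge current in A, battery voltage in V)
samples = [
    (0, 20.0, 13.2),
    (1800, 20.0, 13.3),
    (3600, 18.0, 13.4),
    (5400, 10.0, 14.2),
    (7200, 2.0, 14.6),
]

RATED_AH, RATED_WH = 120.0, 1500.0  # from the battery's label


def integrate(samples):
    """Trapezoidal integration of current (Ah) and power (Wh) over time."""
    ah = wh = 0.0
    for (t0, i0, v0), (t1, i1, v1) in zip(samples, samples[1:]):
        hours = (t1 - t0) / 3600.0
        ah += (i0 + i1) / 2 * hours
        wh += (i0 * v0 + i1 * v1) / 2 * hours
    return ah, wh


ah, wh = integrate(samples)
print(f"{ah:.1f} Ah / {wh:.0f} Wh in = {ah / RATED_AH:.0%} of rated Ah")
```

The comparison with the 120 Ah rating only means something if the count starts from a genuinely empty battery (BMS cutoff) and ends at a genuinely full one.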

I did not notice any heat or abnormal behavior in my set up.

Ampster

Renewable Energy Hobbyist

> So the battery at bms shut off with load removed showed 12.5 volts. So that should be near full discharge correct?

12.5 volts is 3.125 volts per cell, which is not close to the full discharge of an LFP cell at 2.5 volts.
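The per-cell arithmetic as a minimal sketch, assuming a 4S (four cells in series) 12 V LiFePO4 pack and the 2.5 V per-cell discharge floor quoted above:

```python
CELLS_IN_SERIES = 4   # assumption: typical 12 V LiFePO4 layout
CELL_EMPTY_V = 2.5    # per-cell discharge floor quoted in the reply


def per_cell_v(pack_v, cells=CELLS_IN_SERIES):
    """Average cell voltage of a series pack."""
    return pack_v / cells


v = per_cell_v(12.5)
print(f"{v:.3f} V per cell, {v - CELL_EMPTY_V:.3f} V above the 2.5 V floor")
```

Because it is an average, it cannot reveal one cell sitting at its cutoff while the other three are still well charged.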

Vigo

Solar Addict

Gotcha. I have 3 of the cheaper pre-built 12v lifepo4s with no bms communication as well, so no judgment there. With the inverter showing 820w and nothing getting hot that number is in the ballpark, it just doesn't include the efficiency losses of the inverter or wiring, but you're still probably talking <900w including those.

So that leaves a battery issue. Taking 900wh to hit 14.6, by itself, doesn't suggest anything bad yet, but it doesn't include the starting point. If the starting voltage before that charge was down in the 12s (or lower!) that would suggest that the ~900wh was almost the total capacity of the battery, which WOULD be bad news. This is just because 12v lifepo4 has already used most of its capacity before it drops from the 13s to the 12s so charging from 12s to 14.6 and only taking 900wh would suggest that's basically all there is.
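Why the starting point matters: the energy needed to reach full is the capacity times the fraction that was missing. A minimal sketch that backs out the implied capacity from the ~900 Wh that went in, assuming a few hypothetical starting states of charge (the real starting point isn't known):

```python
ENERGY_IN_WH = 900.0  # what the charge controller reported putting in


def implied_capacity_wh(energy_in_wh, start_soc):
    """Capacity implied if charging from start_soc (0..1) to full took energy_in_wh.
    Ignores charging losses, so it slightly overstates capacity."""
    return energy_in_wh / (1.0 - start_soc)


for soc in (0.05, 0.20, 0.40):
    print(f"start at {soc:.0%}: ~{implied_capacity_wh(ENERGY_IN_WH, soc):.0f} Wh")
```

Only if the battery really started near empty does ~900 Wh point to a roughly 1 kWh battery.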

Most 12 V LiFePO4 BMSs come with a preset low-voltage shutoff point of 10.0 or 10.5 V. BUT, they will also disconnect when any individual cell hits too low a voltage, or if there is a high-temperature problem. The fact that the voltage was still 12.5 V after it reconnected is a bad sign, because it means either A. a single cell hit too low a voltage while the others were still holding a lot of charge, or B. something in there is getting way too hot. Usually a bad cell is a bit of both, because it will have higher internal resistance, which means it gets hotter while doing less.
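The disconnect logic described above, sketched as a function. Every threshold here is hypothetical (real BMS settings vary by model and usually aren't published for sealed units); the point is that any one condition trips the cutoff, so a single weak cell can disconnect a pack whose total voltage still looks healthy:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BmsLimits:
    pack_low_v: float = 10.0   # hypothetical pack low-voltage cutoff
    cell_low_v: float = 2.5    # hypothetical per-cell low-voltage cutoff
    max_temp_c: float = 65.0   # hypothetical over-temperature limit


def disconnect_reasons(cell_vs, temp_c, limits=BmsLimits()):
    """Return the conditions that would open the BMS (empty list = stay on)."""
    reasons = []
    if sum(cell_vs) <= limits.pack_low_v:
        reasons.append("pack undervoltage")
    low = [i for i, v in enumerate(cell_vs) if v <= limits.cell_low_v]
    if low:
        reasons.append(f"cell undervoltage on cell(s) {low}")
    if temp_c >= limits.max_temp_c:
        reasons.append("over-temperature")
    return reasons


# One weak cell: the pack still reads ~12.3 V, yet the BMS trips
print(disconnect_reasons([3.28, 3.27, 2.45, 3.29], temp_c=30.0))
```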

The temperature issue would be fairly easy to rule out: if you pull a high load on it again and happen to be near it when it disconnects, you can feel around the case, since anything hot enough to shut it down on the inside would probably make it at least warm somewhere on the outside. It could also have a faulty built-in temp switch or BMS. THAT would be inconvenient, because it would be hard to diagnose or prove without getting inside, and it's probably a sealed unit.

So I'm guessing the more likely thing is some kind of cell issue. Without being able to communicate with the BMS OR open the case, it's difficult to diagnose any further than that. I would charge it again, drain it until it disconnects again, and look at the numbers and the heat. If it hasn't stayed cool and delivered pretty close to its rated Wh, I would try to get it warrantied.

If that turns out to be the case, it's a point in favor of either or both of A. getting a BMS with communication and B. getting a bolted-together case that can be opened for user diagnosis and repair. Both options drive the cost up, and like I said, I've bought sealed non-communicating units 3 times, so I'm not saying "do what I say, not what I do." In my case I've been lucky enough to see pretty much the full capacity from my cheap pre-built batteries and haven't had any issues. Still, any future LiFePO4 I buy will most likely be DIY or at least a user-serviceable design.

Would it hit 10 or 10.5 volts at bms under load and then when load is removed the battery would read 12.6?

I will let you know what goes into it after it is charged via solar. I also picked up a 5 A charger; after the solar/Renogy app records what gets put in, I may hook it up to wall power and see what happens.

Scratch that, it's gonna be cloudy for three days in a row here in AZ. Gonna charge with the wall power charger and see if the behavior is different.

Vigo

Solar Addict

> Would it hit 10 or 10.5 volts at bms under load and then when load is removed the battery would read 12.6?

With LiFePO4 and good wiring, that is basically impossible: LiFePO4 has such low internal resistance that the voltage doesn't sag much under load. Usually it's in the low tenths of a volt at most, never 2+ volts as far as I have seen.

So the only possibility to watch out for there is bad connections causing a ~2v voltage drop between battery and inverter while under load (assuming the inverter has its own low voltage cutoff, which they usually do). That would be easy to test for because it should be present all the time (as long as nothing is physically moved/changed) so you should be able to put another 800w load on it and expect to get the same voltage drops in the same areas. The actual voltage drop is proportional to current so it wouldn't drop 2v all the time, just saying if it EVER dropped 2v while doing 800w, and you haven't physically disturbed anything, it should do the exact same thing again under 800w. So load it up to 800+w again, if the voltage at the battery and voltage at the inverter are nearly the same, you can rule out that possibility. Which would leave you back at the battery. It's worth checking, though.
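Since a resistive drop is V = I × R, a drop measured at one current predicts the drop at another. A minimal sketch of the battery-vs-inverter comparison, assuming hypothetical readings taken at the two ends of the cable under the same ~800 W load:

```python
def wiring_resistance(v_at_battery, v_at_inverter, current_a):
    """Resistance of the path between the two measurement points (Ohm's law)."""
    return (v_at_battery - v_at_inverter) / current_a


def predicted_drop(resistance_ohm, current_a):
    """Voltage lost across that path at another current."""
    return resistance_ohm * current_a


# Hypothetical readings under an ~800 W load (~63 A)
r = wiring_resistance(v_at_battery=13.20, v_at_inverter=13.05, current_a=63.0)
for amps in (10, 63, 80):
    print(f"{amps:>3} A -> {predicted_drop(r, amps):.2f} V drop")
```

If both ends read nearly the same under load, the wiring is ruled out and the sag is happening inside the battery.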

I didn't do a long pull on the battery yesterday, but... After being fully charged on shore power, the battery was reading 13.4 V on the inverter display. I turned on the 820 W load and saw the battery voltage drop to 12.6 V with the load applied. I ran the load for about 20 minutes and took the load off. After removing the load, the inverter showed a battery voltage of 13.1 V.

So 20 minutes (1/3 of an hour) at 820 W is about 273 Wh. 1500 - 273 = 1227 Wh, and 1227/1500 ≈ 82%, so roughly 80% of the charge should be left if the battery was at full capacity. 13.1 V after removing the load seems low; that correlates to about a 40% state of charge, correct? Or am I wrong?
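The same estimate as a minimal sketch. The optional inverter efficiency is an assumption, not something measured here: the 820 W reading is the inverter's AC output, so the battery supplied somewhat more than that.

```python
RATED_WH = 1500.0


def remaining_fraction(load_w, minutes, rated_wh=RATED_WH, inverter_eff=1.0):
    """Fraction of rated energy left after running load_w for `minutes`,
    assuming the battery started full. inverter_eff < 1 charges the battery
    for inverter losses on top of the AC load."""
    used_wh = load_w * minutes / 60.0 / inverter_eff
    return (rated_wh - used_wh) / rated_wh


print(f"{remaining_fraction(820, 20):.1%}")                     # ideal inverter
print(f"{remaining_fraction(820, 20, inverter_eff=0.90):.1%}")  # hypothetical 90% efficiency
```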

I did pick up another battery of the same type and capacity. I want to swap them and see if I am seeing the same potential capacity issue.

Anyone's thoughts?

Vigo

Solar Addict

Well, if you didn't conveniently have another battery of the same type and capacity, I'd say you might want to get a little more precise with the numbers before making big decisions. I'm all for ballparking numbers, but when you start doing percentages on ballparks of ballparks of ballparked numbers, you can get some WAY off results. But since you DO have another identical battery, the easiest thing is not to improve your tracking/accuracy; the easiest thing is to just swap and see what happens! We can always aim higher on accuracy after that. Your basic thought process on that math is valid, other than the 'tolerance stackup' issue with the assumed numbers.

I'm surprised you're seeing a 0.8 V drop AT the battery (I'm assuming when you say battery voltage you are reading it at the battery), and that alone would make me suspicious of the health or quality of the battery.

If you are taking that number from closer to the inverter, it wouldn't surprise me if the TOTAL voltage drop of the battery itself (which i would have guessed to be maybe half what you're seeing) AND the voltage drop of the wiring between battery and inverter, totalled 0.8v under that kind of load. It's probably a 70-80a current and unless your wiring is pretty hefty you're going to drop some tenths at 70+A.
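To put numbers on "some tenths": copper cable resistance is R = ρL/A, and the drop is I × R. A minimal sketch, assuming a hypothetical 2 m round-trip cable run, copper resistivity near 20 °C, and approximate cross-sections for a few common gauges; it ignores lugs, fuses, and terminals, which add more:

```python
RHO_COPPER = 1.68e-8  # ohm*m, copper near 20 °C

# Approximate conductor cross-sections in mm^2
AWG_AREA_MM2 = {"8 AWG": 8.37, "4 AWG": 21.2, "2/0 AWG": 67.4}

ROUND_TRIP_M = 2.0   # hypothetical: 1 m positive + 1 m negative
CURRENT_A = 75.0     # middle of the 70-80 A estimate


def cable_resistance(length_m, area_mm2, rho=RHO_COPPER):
    """R = rho * L / A, with the area converted from mm^2 to m^2."""
    return rho * length_m / (area_mm2 * 1e-6)


for gauge, area in AWG_AREA_MM2.items():
    drop = CURRENT_A * cable_resistance(ROUND_TRIP_M, area)
    print(f"{gauge:>8}: {drop:.2f} V drop at {CURRENT_A:.0f} A")
```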

So to clarify, where are you taking this voltage reading? A pic of the setup might also help, because depending on where the inverter power cabling splits off from the cabling going to the charge controller, different voltage drops would show up at each location.

The voltage reading is what the inverter display is showing as battery voltage. The inverter is hooked directly to the battery terminals.

hankcurt

Solar Enthusiast

> The voltage reading is what the inverter display is showing as battery voltage.

The discharge voltage curve of lithium iron phosphate batteries is so flat that the voltage drop in the leads to the inverter becomes significant. The only way to really know the battery voltage under load is to measure it directly at the battery posts with a voltmeter.

This may sound redundant to you, because you noted that the inverter leads are connected directly to the battery also. The difference is that the inverter leads are carrying the current to the inverter, and thus the leads are dropping some of the voltage due to the resistance of the copper wire. Whereas the leads of a volt meter are carrying almost no current, so that gives you a more accurate reading of the actual battery voltage.

Under the same load, the inverter display reads 12.6 V, and with a multimeter at the battery terminals I was reading 13.11 V. Prior to applying the load, the battery was reading 13.6 V on the inverter display. (I forgot to use the multimeter beforehand, but without a load the inverter display should be pretty close, correct?)
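Those two readings are enough for a rough estimate of the resistance between the battery posts and the inverter's voltage sense point, and of the power it turns into heat. A minimal sketch, assuming the DC current is about 820 W ÷ 12.6 V; that ignores inverter losses (so the real current is somewhat higher) and assumes the inverter's voltmeter is accurate:

```python
V_BATTERY = 13.11   # multimeter at the battery posts, under load
V_INVERTER = 12.6   # inverter display, same load
LOAD_W = 820.0      # inverter output reading

current_a = LOAD_W / V_INVERTER        # ~65 A (underestimate: ignores inverter losses)
drop_v = V_BATTERY - V_INVERTER        # voltage lost between posts and inverter sense point
resistance_ohm = drop_v / current_a    # Ohm's law
heat_w = drop_v * current_a            # power dissipated along that path

print(f"{current_a:.1f} A, {drop_v:.2f} V drop, "
      f"{resistance_ohm * 1000:.1f} mOhm, ~{heat_w:.0f} W of heat")
```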

hankcurt

Solar Enthusiast

Without a load on the inverter, if the inverter volt meter is accurate, it should be pretty close to the actual battery voltage.

So some quick math:

The battery's claimed capacity of 1500 Wh ÷ 12.8 V = 117.19 Ah, so you probably have cells with around 115 to 120 Ah of capacity.

Your load of 820 watts ÷ 12.6v at the inverter = 65 amps of current required.

This indicates you were drawing down the battery at about 0.5C (the C rate is the discharge current relative to capacity; 1C would empty the battery in one hour).
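The same quick math as a sketch, using the numbers above (1500 Wh rated, 12.8 V nominal, 820 W at 12.6 V). C-rate here is discharge current divided by capacity in Ah:

```python
RATED_WH = 1500.0
NOMINAL_V = 12.8       # 4 x 3.2 V LiFePO4 cells
LOAD_W = 820.0
V_AT_INVERTER = 12.6

capacity_ah = RATED_WH / NOMINAL_V     # ~117 Ah
current_a = LOAD_W / V_AT_INVERTER     # ~65 A
c_rate = current_a / capacity_ah       # ~0.56C, i.e. "about 0.5C"
hours_to_empty = 1.0 / c_rate          # at constant current

print(f"{capacity_ah:.1f} Ah, {current_a:.1f} A, {c_rate:.2f}C, ~{hours_to_empty:.1f} h")
```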

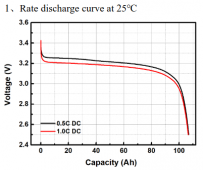

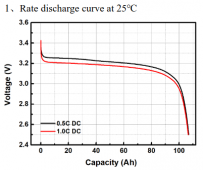

So if we reference the chart above, which I pulled from an EVE 105 Ah cell datasheet, we can find that the expected cell voltage at a 0.5C rate with a nearly full battery (the left end of the chart) is less than 3.3 V and slightly more than 3.25 V.

Dividing your battery voltage of 13.11 V under load by 4, because the battery contains four cells in series, gives about 3.28 V per cell. So by this measure, the cell voltage is what would be expected under that load.

The other thing this chart shows is how difficult it is to determine the remaining battery capacity by using voltage. You can see that the 0.5C discharge line only comes down to 3.2v when the battery has been 60% discharged, while on the 1.0C discharge line the voltage reaches the same level after only discharging 20% of the battery capacity.

It also shows that you can't really consider most of the battery capacity used until the voltage under load goes below 3.0v per cell, which is 12v for the total battery.
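Checking a loaded pack voltage against the chart, as a minimal sketch. The 3.25-3.30 V band and the 3.0 V "mostly used" level are read from the EVE 105 Ah chart discussed above and apply to that cell at roughly a 0.5C load; treat them as assumptions for any other battery:

```python
CELLS = 4
EXPECTED_BAND_V = (3.25, 3.30)  # near-full cell voltage at ~0.5C, read from the chart
MOSTLY_USED_V = 3.0             # per-cell level under load where most capacity is gone


def check_under_load(pack_v_under_load, cells=CELLS):
    """Return (per-cell voltage, within near-full band?, mostly used?)."""
    per_cell = pack_v_under_load / cells
    in_band = EXPECTED_BAND_V[0] <= per_cell <= EXPECTED_BAND_V[1]
    mostly_used = per_cell < MOSTLY_USED_V
    return per_cell, in_band, mostly_used


per_cell, in_band, mostly_used = check_under_load(13.11)
print(f"{per_cell:.2f} V/cell, matches chart: {in_band}, mostly used: {mostly_used}")
```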
