I am trying to get my head around whether increasing the instantaneous discharge rate of an LFP battery rack affects the total energy that can be usefully extracted from it, when the same amount of energy is still consumed over a given time period.

Or, to put it more clearly with an example: if one were to discharge, say, a 280Ah / 14.3kWh battery via an inverter, with a 2kW load for an hour and then a 3kW load for the following hour, would that deplete the batteries more than running both loads in parallel at 5kW for a single hour? I.e. is it better to (say) cook and do the washing in series or in parallel, with everything else equal?

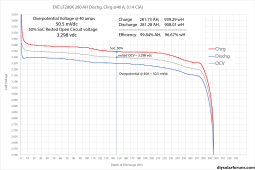

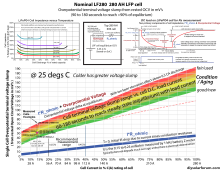

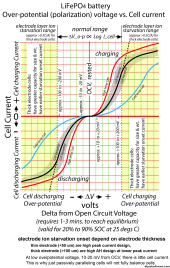

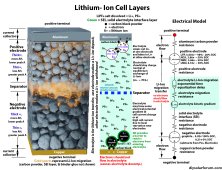

Obviously the Ah rating of the battery is constant, but the Wh rating depends on voltage as well as current. Drawing a larger load means more current is drawn while the cell voltage simultaneously sags. In addition, the internal resistance of the cells and the resistance of the DC cable between battery and inverter will generate more heat, i.e. lost energy (well, not really lost, just converted into a form that is not useful to me). But that extra heat is generated for less time.
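To put rough numbers on the resistive part of that trade-off, here is a minimal sketch comparing the I²R heat for the two schedules. The 51.2V pack voltage (roughly 14.3kWh / 280Ah) and the 0.02 ohm combined cell-plus-cable resistance are assumed values for illustration, not measurements. The sketch also ignores voltage sag, inverter efficiency and inverter idle draw, so it only covers one piece of the question.

```python
# Rough I^2 * R comparison of sequential vs. parallel loads.
# ASSUMPTIONS (illustrative only, not measured values):
#   PACK_VOLTAGE   - nominal pack voltage, held constant (no sag modelled)
#   RESISTANCE_OHM - combined cell internal + DC cable resistance
PACK_VOLTAGE = 51.2
RESISTANCE_OHM = 0.02


def resistive_loss_wh(load_w, hours,
                      pack_voltage=PACK_VOLTAGE,
                      resistance_ohm=RESISTANCE_OHM):
    """Heat lost in the DC path (Wh) for a constant load over a duration.

    Treats the inverter as lossless, so DC current = load / pack voltage.
    """
    current_a = load_w / pack_voltage
    return current_a ** 2 * resistance_ohm * hours


# Schedule A: 2 kW for one hour, then 3 kW for one hour.
sequential_wh = resistive_loss_wh(2000, 1) + resistive_loss_wh(3000, 1)

# Schedule B: both loads together, 5 kW for one hour.
parallel_wh = resistive_loss_wh(5000, 1)

print(f"sequential loss: {sequential_wh:.1f} Wh")
print(f"parallel loss:   {parallel_wh:.1f} Wh")
print(f"ratio parallel/sequential: {parallel_wh / sequential_wh:.2f}")
```

Because the loss scales with the square of the current, the ratio between the two schedules is (5²)/(2² + 3²) = 25/13 regardless of the resistance and voltage assumed, so under this simplified model the shorter duration does not fully offset the higher current. Whether that outweighs an extra hour of inverter idle consumption depends on figures not given here.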

What I've hit a mental block on is whether "5kW drawn for 1 hour + 1 hour with the inverter idle" would result in more, less or the same energy consumed from the batteries compared to drawing the same 5kWh spread over 2 hours.
