12VoltInstalls

I installed two Btr Power 140Ah 12V batteries in parallel a week or so ago. No problems other than cloudy days, which of course isn’t a failure. They charged fine from my 4S2P array of 100W panels through the existing 1012LV-MK. Before that, I had charged them for 36+ hours at 14.5V with a 10A voltage-controlled charger until the current dropped to zero amps. I then adjusted the charger to 14.6V, and it ran ~2 minutes before reaching zero amps.
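
To put those numbers in per-cell terms, here is a small sketch. It assumes each Btr Power 12V pack is a four-cell (4S) LiFePO4 battery, which the post does not state, and the helper `per_cell` is hypothetical, written only for this arithmetic. It also totals the bank capacity and the array's nameplate power (real panel output is lower).

```python
# Assumption: each 12V pack is 4 LiFePO4 cells in series (4S); not stated in the post.
CELLS_IN_SERIES = 4

def per_cell(pack_volts: float, cells: int = CELLS_IN_SERIES) -> float:
    """Average cell voltage for a series string (assumes perfectly balanced cells)."""
    return pack_volts / cells

# Two 140Ah packs in parallel: capacities add, voltage stays the same.
bank_ah = 2 * 140

# 4S2P of 100W panels: 8 panels; this is nameplate power only.
array_w = 4 * 2 * 100

for label, v in [("old charger target", 14.5), ("adjusted target", 14.6), ("HVD", 15.0)]:
    print(f"{label}: {v:.2f} V pack -> {per_cell(v):.3f} V/cell")
print(f"bank: {bank_ah} Ah, array: {array_w} W nameplate")
```

Because the helper divides by the cell count, it only gives an average; an individual cell can sit well above or below it.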

A planned migration to a 4215AN Triron Xtra led me to move the solar cables from the 1012LV to the 4215AN. It charged for a while and then would stop charging, usually at 12.9V. I reconnected the panel input to the 1012LV: no problems, it charged fine.

After reading here, remembering this experience, and reading another thread and many more like it, I lowered all the settings and, against my sensibilities, set the high-voltage disconnect to 15V.

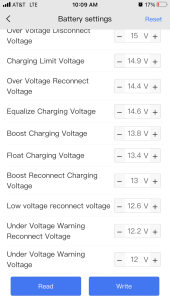

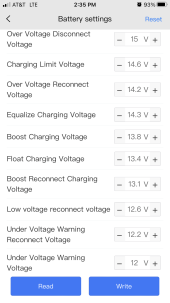

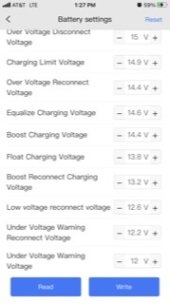

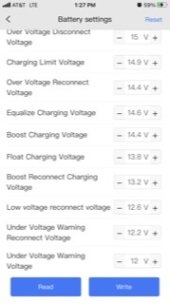

My settings are:

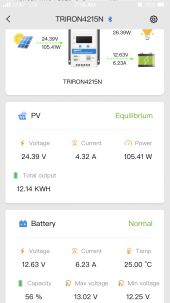

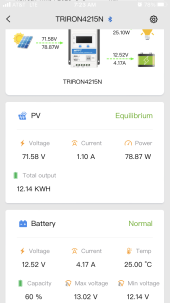

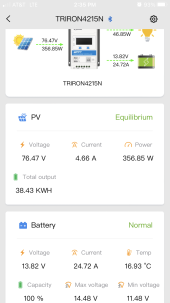

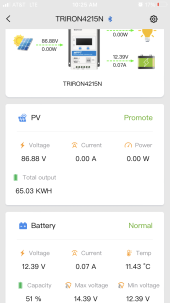

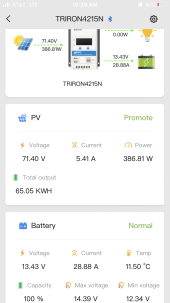

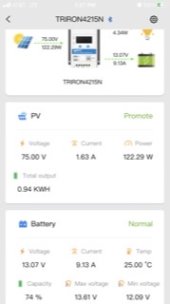

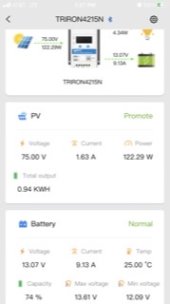

…which is yielding:

Note that it did shut down charging once today, but it resumed on its own without my switching the panels off and back on. Yesterday I had to do exactly that, as noted at the end of this thread.

As of this moment it has been hovering around 13.1V for several hours.

My conclusions follow.

Please question them.

From past high-voltage cutouts in full sun with lead-acid batteries, I learned to assume that the Epever firmware has a flaw: it doesn’t throttle the panels fast enough, lets voltage spikes through, and then disables charging.

Using that logic, I took a wild guess that the BMSs were shutting down from high voltage. So I lowered the charge parameters to widen the gap to the high-voltage shutdown (HVSD), and I figured this would also keep the Btr Power BMS off its rev limiter. My numbers were influenced by an old post by @mikefitz; thank you.
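
The "bigger differential" idea can be checked with simple subtraction. The actual settings are in the screenshots, so the boost value below is hypothetical, as is the per-cell BMS overvoltage cutoff (it is not a Btr Power spec); only the 15V HVD comes from the post, and 4S LiFePO4 is again assumed. The sketch shows how much room a lowered boost setpoint leaves below both the controller's HVD and the pack voltage at which a balanced pack would reach the assumed BMS cell limit.

```python
# Hypothetical values for illustration only; the real settings are in the screenshots.
HVD_V = 15.0            # high-voltage disconnect from the post
BOOST_V = 14.2          # hypothetical lowered boost/absorb setting
BMS_CELL_OVP_V = 3.65   # hypothetical BMS per-cell overvoltage cutoff (not a Btr Power spec)
CELLS = 4               # assumption: 4S LiFePO4

def headroom(setpoint_v: float, limit_v: float) -> float:
    """Volts between a charge setpoint and a limit above it (negative = over the limit)."""
    return limit_v - setpoint_v

# Pack voltage at which perfectly balanced cells would each hit the assumed cutoff.
bms_pack_limit = BMS_CELL_OVP_V * CELLS

print(f"boost -> HVD headroom: {headroom(BOOST_V, HVD_V):.2f} V")
print(f"boost -> BMS (balanced cells) headroom: {headroom(BOOST_V, bms_pack_limit):.2f} V")
print(f"HVD above BMS pack-equivalent limit: {HVD_V > bms_pack_limit}")
```

With these assumed numbers, a spike would reach the BMS pack-equivalent limit before the controller's 15V HVD, so lowering the boost setpoint is what widens the margin to the BMS.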

I probably can’t see what it will do in full sun for a few days because there isn’t any, but since I can now charge to a settled voltage above 12.9V, I’m hoping the problem is solved.

Others also mentioned possible cell imbalance tripping the BMSs. When I last checked yesterday with my Klein VOM, both batteries read 12.91V. Btr Power batteries have no communications, so I can’t prove it, but I think each is healthy on its own and balanced with the other.
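
One caveat on using pack voltage to judge balance: the pack reading is the sum of its cells, so two packs can show the same voltage while one hides a high cell, and it is a single cell crossing its limit that trips a BMS. The cell readings and the cutoff below are hypothetical (these packs expose no cell data), and 4S is assumed.

```python
# Hypothetical cell readings near the top of charge; the packs expose no cell data.
BMS_CELL_OVP_V = 3.65  # hypothetical per-cell cutoff, for illustration only

balanced = [3.55, 3.55, 3.55, 3.55]
imbalanced = [3.45, 3.45, 3.45, 3.85]

for name, cells in [("balanced", balanced), ("imbalanced", imbalanced)]:
    pack = sum(cells)                       # what a meter on the terminals sees
    tripped = max(cells) > BMS_CELL_OVP_V   # what the BMS actually reacts to
    print(f"{name}: pack {pack:.2f} V, highest cell {max(cells):.2f} V, BMS trips: {tripped}")
```

Both made-up packs read 14.20V at the terminals, yet only the imbalanced one would trip the assumed cutoff, which is why imbalance tends to show up near full charge rather than at a resting 12.91V.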

Finally, someone asked about a poor connection. Testing resistance across each battery’s positive and negative, I don’t see one. The voltages match, and each battery is wired individually to a busbar with equal-length 48” cables. Measuring between the two positive battery bolts, and between the two negative battery bolts (which are connected through the busbar), I get 0.00 ohms. So, in my small mind, there are no issues.
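
A 0.00 ohm reading means the resistance is below the meter's 0.01 ohm display resolution, not that it is zero. Ohm's law shows why resistances too small to display can still matter at charge current. The current and the milliohm values below are assumptions for illustration; measuring voltage drop across a connection while current is flowing is the usual way to find a marginal one.

```python
# Hypothetical numbers: 0.00 on the display only bounds the resistance below ~0.005 ohm;
# the charge current is assumed, not measured.
def drop_and_heat(current_a: float, resistance_ohm: float) -> tuple[float, float]:
    """Voltage drop (V = I*R) and heat (P = I^2*R) across a connection."""
    return current_a * resistance_ohm, current_a ** 2 * resistance_ohm

CHARGE_A = 40.0  # hypothetical total charge current
for r_mohm in (0.5, 2.0, 4.0):  # values a 0.01 ohm display would still show as 0.00
    v, p = drop_and_heat(CHARGE_A, r_mohm / 1000)
    print(f"{r_mohm} mOhm at {CHARGE_A:.0f} A: {v * 1000:.0f} mV drop, {p:.1f} W")
```

In a parallel bank, an extra milliohm or two on one battery's path also shifts more of the current onto the other battery.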

Do these conclusions and my settings sound reasonable to others? Or just to me?
