scott harris

New Member · Joined Jan 1, 2020 · Messages: 92

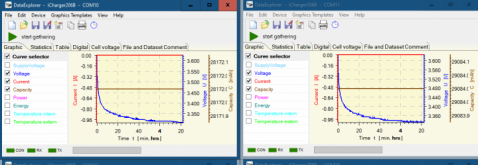

I am charging each of my 280 Ah cells to 3.6 volts in order to do a capacity test. I use a non-programmable bench charger capable of 10 amps. With the power supply set to 3.6 volts, charging takes forever once the charger enters CV mode and the current tapers off. I'm thinking of building a voltage-sensing circuit that turns the charger off once the voltage across the battery terminals reaches 3.6 volts. I would then just crank the power supply up to 4.0 volts and let the cell charge at 10 amps until the terminal voltage reaches 3.6 volts.
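To make the idea concrete, here is a rough sketch of the cutoff logic in Python. It is only a model of the decision, not a circuit design: the `CutoffLatch` class and the sample readings are made up for illustration. It assumes the cutoff should latch, since the terminal voltage typically sags once the charge current is removed and a plain comparator could switch the charger back on.

```python
class CutoffLatch:
    """Hypothetical model of a latching over-voltage cutoff for one cell."""

    def __init__(self, cutoff_v=3.6):
        self.cutoff_v = cutoff_v   # trip point in volts, measured at the cell terminals
        self.tripped = False       # once True, the charger stays off until a manual reset

    def update(self, terminal_v):
        """Return True if the charger should be on for this voltage reading."""
        if terminal_v >= self.cutoff_v:
            self.tripped = True
        return not self.tripped


latch = CutoffLatch()
# Illustrative readings only: voltage rises to the cutoff, then relaxes after disconnect.
readings = [3.35, 3.42, 3.55, 3.60, 3.48]
states = [latch.update(v) for v in readings]
print(states)
```

The last reading is below 3.6 volts, but the charger stays off because the latch has already tripped.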

In other words, I would be charging at about 0.036C (10 amps into a 280 Ah cell) until the cell reaches 3.6 volts and then stop.
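For reference, the C-rate is just the charge current divided by the rated capacity. A quick check of the arithmetic, using the 10 amp and 280 Ah figures from this post (the helper name `c_rate` is only for illustration):

```python
def c_rate(current_a, capacity_ah):
    """C-rate = charge current (A) divided by rated capacity (Ah)."""
    return current_a / capacity_ah


rate = c_rate(10.0, 280.0)
# Time to put a full 280 Ah into the cell at a constant 10 A, ignoring losses.
hours_for_full_capacity = 280.0 / 10.0
print(f"C-rate: {rate:.4f}C, hours for full capacity: {hours_for_full_capacity:.0f}")
```

That works out to roughly 0.036C, and about 28 hours for a full charge from empty at a constant 10 amps.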

Does anyone see a problem with this method?
