Breaking news: you are all charging the wrong way. ;-)

Sensationalism aside, some things have changed in the newest datasheet for EVE LF280K.

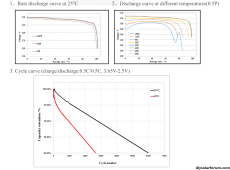

In older datasheets standard charge is said to be "Charging the cell with charge current 0.5C(A) and constant voltage 3.65V at(25±2)℃, 0.05C cutoff." In other words constant current, then constant voltage with a tail current cutoff.

If you look at the latest datasheet, all charge rates are now specified in terms of power, not current: 0.5C became 0.5P. This means that a constant power charge tapers the current off slightly as the cell approaches 100% SOC, because the voltage rises at that point. This is of course not possible with 99% of solar chargers, because they only have settings for constant current, not constant power.
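To put rough numbers on that taper, here is a minimal Python sketch. It assumes that "0.5P" means half of the cell's nominal energy per hour, and it uses the nominal 3.2 V and 280 Ah figures for the LF280K; that reading of "P" is my assumption, not something quoted from the datasheet. The function name is made up for illustration.

```python
# Assumed nominal figures for the LF280K (not quoted from the new datasheet).
NOMINAL_VOLTAGE_V = 3.2
CAPACITY_AH = 280.0


def constant_power_current(p_rate, cell_voltage_v):
    """Current (A) a constant power charger would deliver at a given cell voltage.

    Assumes 1P equals nominal voltage times rated capacity, in watts.
    """
    power_w = p_rate * NOMINAL_VOLTAGE_V * CAPACITY_AH
    return power_w / cell_voltage_v


# Current drops as the cell voltage climbs toward 3.65 V.
for v in (3.20, 3.35, 3.50, 3.65):
    print(f"{v:.2f} V -> {constant_power_current(0.5, v):.1f} A")
```

Under those assumptions the current falls from about 140 A at 3.2 V to about 123 A at 3.65 V, so the taper is real but modest compared with a constant voltage tail.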

The most important change is that it seems like the EV industry has dropped the idea that a standard charge involves monitoring tail current. There is no mention of it at all. Standard charging is simply constant power charging to 3.65V. This is a simpler model to get to 100% SOC.
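The difference between the two termination rules can be sketched like this. It is only an illustration of the logic as I read the two datasheets, not charger firmware; the function names are hypothetical, and the 280 Ah default is the assumed rated capacity.

```python
def old_standard_charge_done(cell_voltage_v, current_a, capacity_ah=280.0):
    """Older datasheet: hold 3.65 V until the current tails off to 0.05C."""
    return cell_voltage_v >= 3.65 and current_a <= 0.05 * capacity_ah


def new_standard_charge_done(cell_voltage_v):
    """Newer datasheet as I read it: stop once the cell reaches 3.65 V."""
    return cell_voltage_v >= 3.65


# A cell that has just hit 3.65 V while still taking 100 A:
print(old_standard_charge_done(3.65, 100.0))  # still in the constant voltage phase
print(new_standard_charge_done(3.65))         # already considered finished
```

The new rule needs only a voltage reading, which is why it is the simpler model.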

Section 3.8.3.7. 25℃ Standard Cycle

Section 3.8.3.8. Cycle Recommended By EVE.